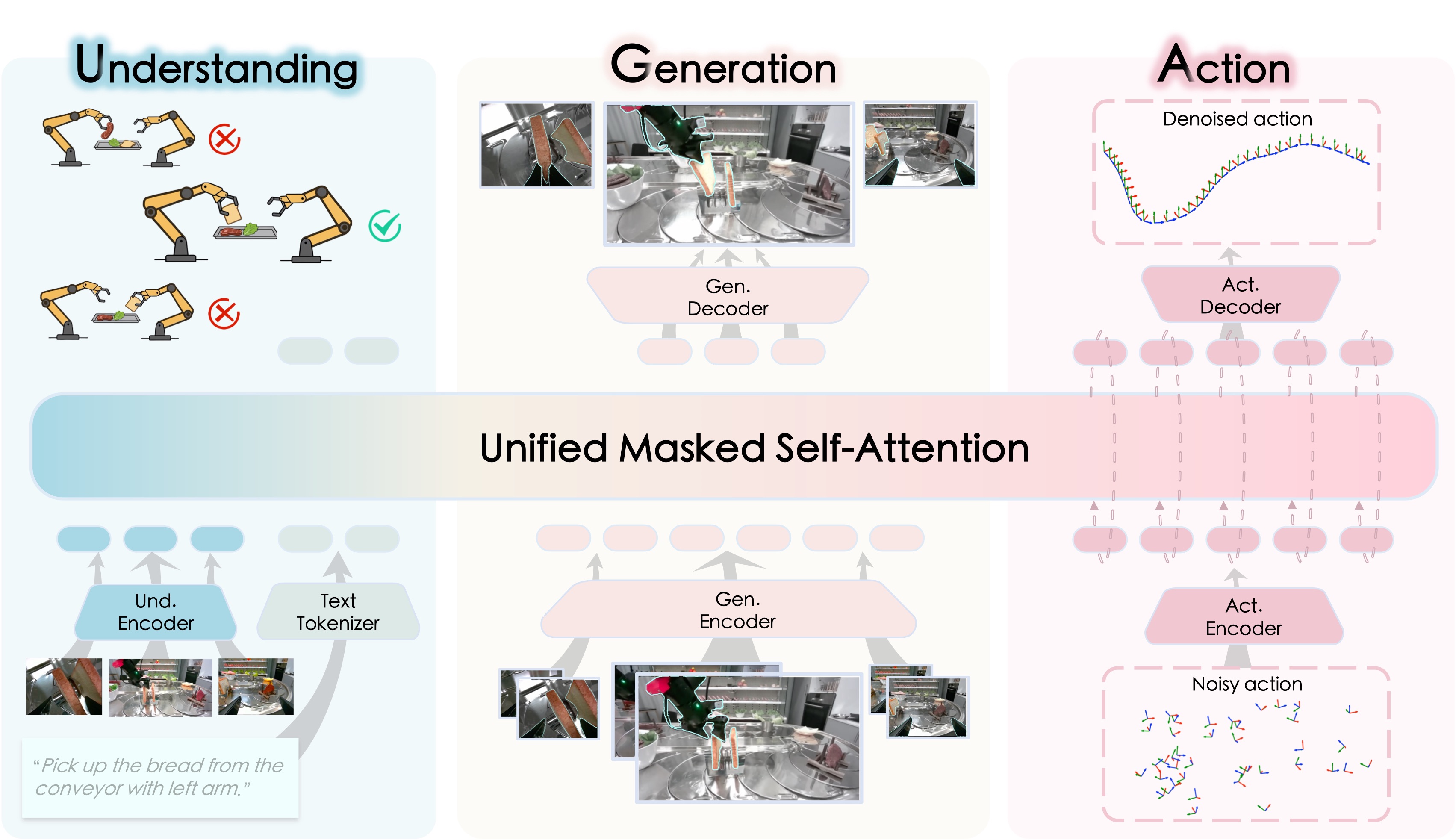

InternVLA-A1 unifies scene understanding, visual foresight generation, and action execution into a single framework.

- 🔮 The Core: Combines an MLLM's semantic understanding with world-model-style dynamics prediction, so the model can "imagine" the future and adapt its actions accordingly.

- 🚀 The Fuel: Empowered by high-fidelity synthetic data (InternData-A1).

- ⚡ The Output: Handles highly dynamic scenarios, such as conveyor belt sorting.

InternVLA-A1 delivers superior performance across both real-world deployments and simulation benchmarks.

- Real-World: Robust execution across 12 diverse tasks, including dynamic scenarios such as conveyor belt sorting.

Demo videos: express_sorting.mp4, parcel_handling.mp4, Overcooked.mp4, sort_parts.mp4, zig_bag.mp4, unscrew_cap.mp4

- Simulation: State-of-the-art results on RoboTwin 2.0 Benchmark (averaged over 50 tasks)

| Metric | | | InternVLA-A1-3B |
|---|---|---|---|
| Avg. Success (Easy) | 79.98% | 84.70% | 88.30% 🥇 |
| Avg. Success (Hard) | 79.50% | 85.02% | 88.48% 🥇 |

- [2026/01/23] InternVLA-A1-3B achieves state-of-the-art results on the RoboTwin 2.0 benchmark! We have released the fine-tuned model InternVLA-A1-3B-RoboTwin on 🤗 HuggingFace.

- [2026/01/14] We released the pre-training code and guidelines for InternVLA-A1-3B.

- [2026/01/07] We released our paper on arXiv.

- [2026/01/05] We released the InternVLA-A1 codebase (for the LeRobot V3.0 ecosystem).

- Release InternVLA-A1-3B

- Release fine-tuning code on downstream tasks

- Release pretraining code on large-scale dataset

- Release InternVLA-A1-2B

This repository has been tested on Python 3.10, CUDA 12.8 and PyTorch 2.7.1. We recommend using conda to create an isolated environment.

Please refer to the Installation Tutorial to prepare your environment.
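Before launching training, it can help to confirm that the active environment matches the tested versions (Python 3.10, CUDA 12.8, PyTorch 2.7.1). The following is a minimal sketch and not part of this repository: `TESTED`, `version_mismatches`, and `detect_versions` are hypothetical names, and the check compares only the major.minor prefix of each version.

```python
import sys
from importlib import import_module

# Versions this README reports as tested. All names here are illustrative.
TESTED = {"python": "3.10", "torch": "2.7", "cuda": "12.8"}


def version_mismatches(found, tested=TESTED):
    """Return human-readable mismatches between found and tested versions.

    Only the leading major.minor prefix is compared, so "2.7.1+cu128"
    is treated as matching "2.7".
    """
    problems = []
    for name, want in tested.items():
        have = found.get(name)
        if have is None:
            problems.append(f"{name}: not found (tested with {want})")
        elif ".".join(have.split(".")[:2]) != want:
            problems.append(f"{name}: found {have}, tested with {want}")
    return problems


def detect_versions():
    """Collect versions from the running interpreter; torch is optional."""
    found = {"python": f"{sys.version_info.major}.{sys.version_info.minor}"}
    try:
        torch = import_module("torch")
    except ImportError:
        return found  # torch and cuda will be reported as "not found"
    found["torch"] = torch.__version__
    if torch.version.cuda is not None:  # None on CPU-only builds
        found["cuda"] = torch.version.cuda
    return found


if __name__ == "__main__":
    report = version_mismatches(detect_versions())
    for line in report or ["environment matches the tested versions"]:
        print(line)
```

A mismatch does not necessarily mean the code will fail; it only indicates that the combination differs from the one the repository was tested on.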

    bash launch/internvla_a1_3b_finetune.sh lerobot/pusht abs false

Here, `abs` indicates using absolute actions, and `false` means that the training script will use the statistics file (`stats.json`) provided by `lerobot/pusht` itself.
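To make the two arguments more concrete, the sketch below shows one common reading of them: an absolute action is the target value itself, a relative (delta) action is the offset from the current state, and dataset statistics are used to normalize actions before training. This is an illustration under stated assumptions, not the repository's implementation. The function names, the layout of the `stats` dictionary, and all numeric values are hypothetical, and mean/std normalization is assumed because this README does not say which scheme the training script applies.

```python
def to_relative(action, state):
    """Convert an absolute action into an offset from the current state."""
    return [a - s for a, s in zip(action, state)]


def normalize(values, mean, std, eps=1e-8):
    """Standardize values with per-dimension mean and std (assumed scheme)."""
    return [(v - m) / (s + eps) for v, m, s in zip(values, mean, std)]


def denormalize(values, mean, std, eps=1e-8):
    """Invert normalize(), mapping model outputs back to action units."""
    return [v * (s + eps) + m for v, m, s in zip(values, mean, std)]


# Hypothetical statistics, shaped like a per-feature mean/std entry.
stats = {"action": {"mean": [256.0, 256.0], "std": [100.0, 100.0]}}

state = [300.0, 150.0]   # current 2D position (made-up values)
action = [306.0, 156.0]  # absolute 2D target (made-up values)

# Absolute mode: the target itself is normalized and used for training.
abs_norm = normalize(action, stats["action"]["mean"], stats["action"]["std"])

# Relative mode, shown only for contrast: the offset from the current state.
delta = to_relative(action, state)  # [6.0, 6.0]

# Round trip: denormalizing recovers the original action up to float error.
recovered = denormalize(abs_norm, stats["action"]["mean"], stats["action"]["std"])
```

Relative actions would need statistics computed over the offsets rather than over the absolute targets, which is one reason the action mode and the source of the statistics are chosen together.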

Please refer to the Pre-training Tutorial for instructions on pretraining InternVLA-A1-3B with the InternData-A1 dataset.

Please refer to the LeRobot V2.1 Fine-tuning Tutorial to fine-tune InternVLA-A1-3B with real-world datasets in the LeRobot V2.1 format. This guide walks you through the complete pipeline: Download Dataset → Convert to v3.0 Format → Fine-tune on Pick-Pen Task.

To benchmark InternVLA-A1-3B, see the RoboTwin 2.0 Finetune Tutorial and the RoboTwin 2.0 Eval Tutorial.

Please refer to the Evaluation Guideline for the complete inference and evaluation workflow for InternVLA-A1-3B.

All code in this repository is licensed under CC BY-NC-SA 4.0. Please consider citing our project if it helps your research.

    @article{contributors2026internvla_a1,
      title={InternVLA-A1: Unifying Understanding, Generation and Action for Robotic Manipulation},
      author={InternVLA-A1 contributors},
      journal={arXiv preprint arXiv:2601.02456},
      year={2026}
    }