@@ -18,7 +21,7 @@ specific language governing permissions and limitations under the License.

## Overview

-[PIA: Your Personalized Image Animator via Plug-and-Play Modules in Text-to-Image Models](https://arxiv.org/abs/2312.13964) by Yiming Zhang, Zhening Xing, Yanhong Zeng, Youqing Fang, Kai Chen

+[PIA: Your Personalized Image Animator via Plug-and-Play Modules in Text-to-Image Models](https://huggingface.co/papers/2312.13964) by Yiming Zhang, Zhening Xing, Yanhong Zeng, Youqing Fang, Kai Chen

Recent advancements in personalized text-to-image (T2I) models have revolutionized content creation, empowering non-experts to generate stunning images with unique styles. While promising, adding realistic motions into these personalized images by text poses significant challenges in preserving distinct styles, high-fidelity details, and achieving motion controllability by text. In this paper, we present PIA, a Personalized Image Animator that excels in aligning with condition images, achieving motion controllability by text, and the compatibility with various personalized T2I models without specific tuning. To achieve these goals, PIA builds upon a base T2I model with well-trained temporal alignment layers, allowing for the seamless transformation of any personalized T2I model into an image animation model. A key component of PIA is the introduction of the condition module, which utilizes the condition frame and inter-frame affinity as input to transfer appearance information guided by the affinity hint for individual frame synthesis in the latent space. This design mitigates the challenges of appearance-related image alignment within and allows for a stronger focus on aligning with motion-related guidance.

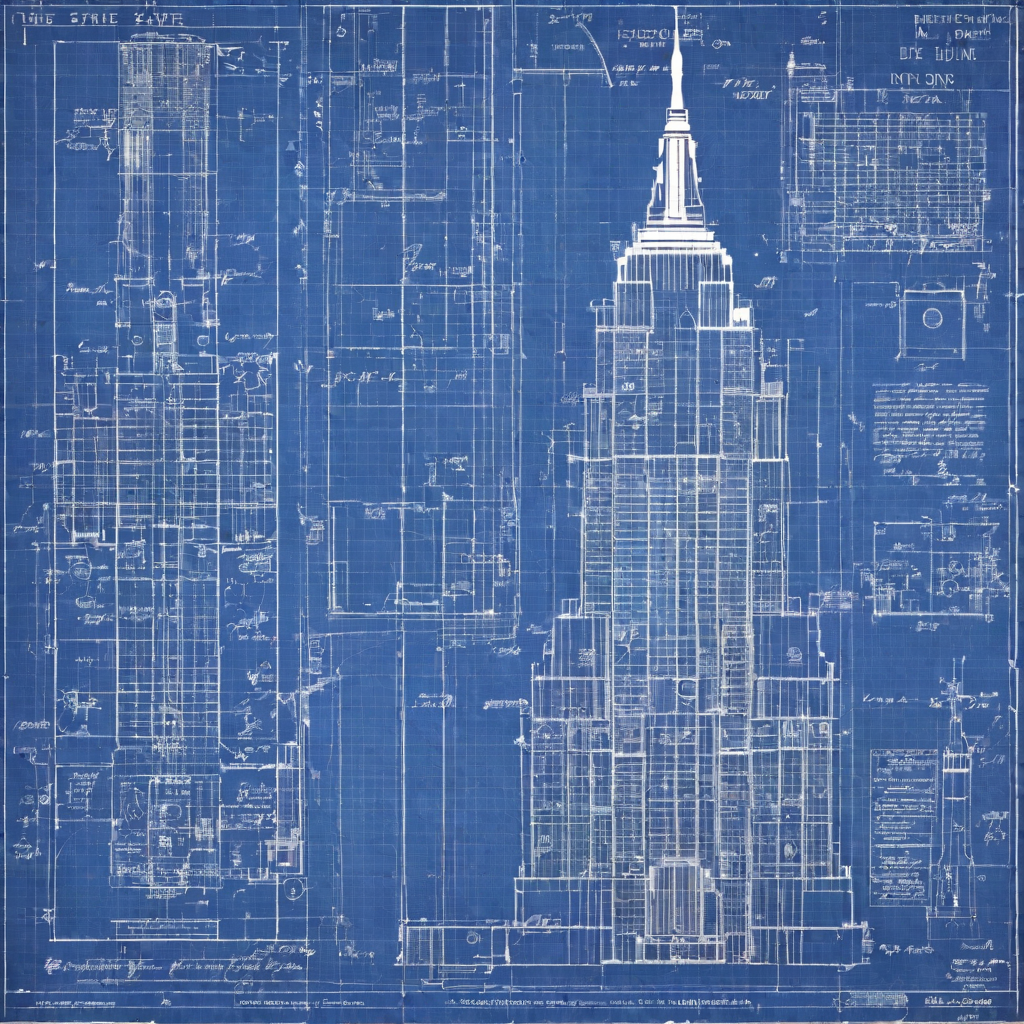

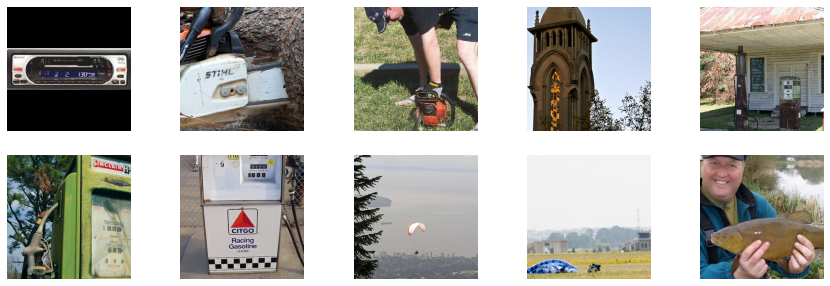

@@ -84,15 +87,12 @@ Here are some sample outputs:

-

+> [!TIP]

+> If you plan on using a scheduler that can clip samples, make sure to disable clipping by setting `clip_sample=False` in the scheduler, as clipping can also have an adverse effect on generated samples. Additionally, the PIA checkpoints can be sensitive to the scheduler's beta schedule; we recommend setting `beta_schedule="linear"`.

## Using FreeInit

-[FreeInit: Bridging Initialization Gap in Video Diffusion Models](https://arxiv.org/abs/2312.07537) by Tianxing Wu, Chenyang Si, Yuming Jiang, Ziqi Huang, Ziwei Liu.

+[FreeInit: Bridging Initialization Gap in Video Diffusion Models](https://huggingface.co/papers/2312.07537) by Tianxing Wu, Chenyang Si, Yuming Jiang, Ziqi Huang, Ziwei Liu.

FreeInit is an effective method that improves the temporal consistency and overall quality of videos generated with video diffusion models without any additional training. It can be applied seamlessly at inference time to PIA, AnimateDiff, ModelScope, VideoCrafter and various other video generation models, and works by iteratively refining the latent-initialization noise. More details can be found in the paper.
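
The core of FreeInit's refinement step can be sketched in NumPy. As described in the paper, the denoised latent is diffused back to the initial noise level, and its low-frequency spatio-temporal components are combined with the high-frequency components of fresh Gaussian noise to form the next initialization. This is a minimal sketch under our own assumptions: `butterworth_low_pass` and `freeinit_reinit` are hypothetical helpers, and the cutoff parameterization (cycles per sample, scaled per axis) is ours and not necessarily the one diffusers uses.

```python
import numpy as np


def butterworth_low_pass(shape, spatial_cutoff=0.25, temporal_cutoff=0.25, order=4):
    """3D Butterworth low-pass mask over (frames, height, width) FFT frequencies."""
    frames, height, width = shape
    # Frequencies in cycles per sample, in np.fft order (DC component at index 0),
    # each axis scaled by its own cutoff so the cutoff sits at distance 1
    ft = np.fft.fftfreq(frames)[:, None, None] / temporal_cutoff
    fh = np.fft.fftfreq(height)[None, :, None] / spatial_cutoff
    fw = np.fft.fftfreq(width)[None, None, :] / spatial_cutoff
    d_squared = ft**2 + fh**2 + fw**2
    # H = 1 / (1 + d^(2n)): 1 at DC, 0.5 at the cutoff, tending to 0 at high frequencies
    return 1.0 / (1.0 + d_squared**order)


def freeinit_reinit(diffused_latent, fresh_noise, low_pass_mask):
    """Keep the low frequencies of the diffused latent, take the high ones from fresh noise."""
    axes = (-3, -2, -1)  # (frames, height, width)
    latent_freq = np.fft.fftn(diffused_latent, axes=axes)
    noise_freq = np.fft.fftn(fresh_noise, axes=axes)
    mixed = latent_freq * low_pass_mask + noise_freq * (1.0 - low_pass_mask)
    # The mask is symmetric in frequency, so the inverse transform is real up to round-off
    return np.fft.ifftn(mixed, axes=axes).real


rng = np.random.default_rng(0)
diffused_latent = rng.standard_normal((4, 8, 16, 16))  # (channels, frames, height, width)
fresh_noise = rng.standard_normal(diffused_latent.shape)
mask = butterworth_low_pass(diffused_latent.shape[1:])
new_init = freeinit_reinit(diffused_latent, fresh_noise, mask)
print(new_init.shape)  # (4, 8, 16, 16)
```

A mask of all ones returns the diffused latent unchanged and a mask of all zeros returns pure fresh noise; the filter interpolates between the two by frequency.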

@@ -146,11 +146,8 @@ export_to_gif(frames, "pia-freeinit-animation.gif")

-

+> [!WARNING]

+> FreeInit is not really free: the improved quality comes at the cost of extra computation. It repeats the sampling process once per FreeInit iteration, so the cost grows with the `num_iters` parameter set when enabling it. Setting `use_fast_sampling=True` reduces this overhead, at the cost of lower quality than `use_fast_sampling=False`, although the results are still better than those of the vanilla video generation model.
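
To get a feel for the overhead, the sketch below counts denoising steps across FreeInit iterations. It assumes that, without fast sampling, every iteration runs the full schedule and, as we understand the diffusers implementation, that fast sampling shortens earlier iterations roughly in proportion to the iteration index. The exact rounding in diffusers may differ, and `freeinit_total_steps` is a hypothetical helper.

```python
def freeinit_total_steps(num_inference_steps, num_iters, use_fast_sampling=False):
    """Illustrative count of denoising steps summed over all FreeInit iterations."""
    total = 0
    for i in range(num_iters):
        if use_fast_sampling:
            # Assumption: iteration i runs a proportionally shorter schedule; the last one runs in full
            total += max(1, num_inference_steps * (i + 1) // num_iters)
        else:
            total += num_inference_steps
    return total


print(freeinit_total_steps(25, num_iters=3))                          # 75
print(freeinit_total_steps(25, num_iters=3, use_fast_sampling=True))  # 49 (8 + 16 + 25)
```

With 25 steps and `num_iters=3`, a plain run costs 25 steps, FreeInit about 75, and FreeInit with fast sampling about 49 under this assumption.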

## PIAPipeline

diff --git a/docs/source/en/api/pipelines/pix2pix.md b/docs/source/en/api/pipelines/pix2pix.md

index d0b3bf32b823..84eb0cb5e5d3 100644

--- a/docs/source/en/api/pipelines/pix2pix.md

+++ b/docs/source/en/api/pipelines/pix2pix.md

@@ -1,4 +1,4 @@

-

+

+# PRX

+

+

+PRX generates high-quality images from text using a simplified MMDiT architecture in which the text tokens are not updated by the transformer blocks. It uses flow matching with a discrete scheduler for efficient sampling and Google's T5Gemma-2B-2B-UL2 model for multilingual text encoding. The ~1.3B-parameter transformer delivers fast inference without sacrificing quality. You can choose between the Flux VAE (8x spatial compression, 16 latent channels) for a balance of quality and speed, or DC-AE (32x spatial compression, 32 latent channels) for stronger latent compression and faster processing.
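
To make the VAE trade-off concrete, here is a small arithmetic sketch of the latent grid each VAE produces for a 512x512 image, assuming the compression factors above apply per spatial side (8x for the Flux VAE, 32x for DC-AE). `latent_shape` is a hypothetical helper, not part of the PRX API, and it ignores any further patchification inside the transformer.

```python
def latent_shape(height, width, spatial_factor, latent_channels):
    """(channels, latent_height, latent_width) produced by a VAE with the given compression."""
    if height % spatial_factor or width % spatial_factor:
        raise ValueError(f"height and width must be multiples of {spatial_factor}")
    return (latent_channels, height // spatial_factor, width // spatial_factor)


flux = latent_shape(512, 512, spatial_factor=8, latent_channels=16)    # (16, 64, 64)
dc_ae = latent_shape(512, 512, spatial_factor=32, latent_channels=32)  # (32, 16, 16)

# Spatial positions the transformer has to cover (before any patchification)
print(flux[1] * flux[2], dc_ae[1] * dc_ae[2])  # 4096 256
```

The DC-AE latent has 16x fewer spatial positions (256 vs 4096) and 8x fewer values overall, which plausibly accounts for much of its speed advantage.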

+

+## Available models

+

+PRX offers multiple variants with different VAE configurations, each optimized for specific resolutions. Base models excel with detailed prompts, capturing complex compositions and subtle details. Fine-tuned models trained on the [Alchemist dataset](https://huggingface.co/datasets/yandex/alchemist) improve aesthetic quality, especially with simpler prompts.

+

+

+| Model | Resolution | Fine-tuned | Distilled | Description | Suggested prompts | Suggested parameters | Recommended dtype |

+|:-----:|:-----------------:|:----------:|:----------:|:----------:|:----------:|:----------:|:----------:|

+| [`Photoroom/prx-256-t2i`](https://huggingface.co/Photoroom/prx-256-t2i)| 256 | No | No | Base model pre-trained at 256 with Flux VAE|Works best with detailed prompts in natural language|28 steps, cfg=5.0| `torch.bfloat16` |

+| [`Photoroom/prx-256-t2i-sft`](https://huggingface.co/Photoroom/prx-256-t2i-sft)| 512 | Yes | No | Fine-tuned on the [Alchemist dataset](https://huggingface.co/datasets/yandex/alchemist) with Flux VAE | Can handle less detailed prompts|28 steps, cfg=5.0| `torch.bfloat16` |
+| [`Photoroom/prx-512-t2i`](https://huggingface.co/Photoroom/prx-512-t2i)| 512 | No | No | Base model pre-trained at 512 with Flux VAE |Works best with detailed prompts in natural language|28 steps, cfg=5.0| `torch.bfloat16` |
+| [`Photoroom/prx-512-t2i-sft`](https://huggingface.co/Photoroom/prx-512-t2i-sft)| 512 | Yes | No | Fine-tuned on the [Alchemist dataset](https://huggingface.co/datasets/yandex/alchemist) with Flux VAE | Can handle less detailed prompts in natural language|28 steps, cfg=5.0| `torch.bfloat16` |
+| [`Photoroom/prx-512-t2i-sft-distilled`](https://huggingface.co/Photoroom/prx-512-t2i-sft-distilled)| 512 | Yes | Yes | 8-step distilled model from [`Photoroom/prx-512-t2i-sft`](https://huggingface.co/Photoroom/prx-512-t2i-sft) | Can handle less detailed prompts in natural language|8 steps, cfg=1.0| `torch.bfloat16` |
+| [`Photoroom/prx-512-t2i-dc-ae`](https://huggingface.co/Photoroom/prx-512-t2i-dc-ae)| 512 | No | No | Base model pre-trained at 512 with [Deep Compression Autoencoder (DC-AE)](https://hanlab.mit.edu/projects/dc-ae)|Works best with detailed prompts in natural language|28 steps, cfg=5.0| `torch.bfloat16` |
+| [`Photoroom/prx-512-t2i-dc-ae-sft`](https://huggingface.co/Photoroom/prx-512-t2i-dc-ae-sft)| 512 | Yes | No | Fine-tuned on the [Alchemist dataset](https://huggingface.co/datasets/yandex/alchemist) with [Deep Compression Autoencoder (DC-AE)](https://hanlab.mit.edu/projects/dc-ae) | Can handle less detailed prompts in natural language|28 steps, cfg=5.0| `torch.bfloat16` |
+| [`Photoroom/prx-512-t2i-dc-ae-sft-distilled`](https://huggingface.co/Photoroom/prx-512-t2i-dc-ae-sft-distilled)| 512 | Yes | Yes | 8-step distilled model from [`Photoroom/prx-512-t2i-dc-ae-sft`](https://huggingface.co/Photoroom/prx-512-t2i-dc-ae-sft) | Can handle less detailed prompts in natural language|8 steps, cfg=1.0| `torch.bfloat16` |

+

+Refer to [this](https://huggingface.co/collections/Photoroom/prx-models-68e66254c202ebfab99ad38e) collection for more information.

+

+## Loading the pipeline

+

+Load the pipeline with [`~DiffusionPipeline.from_pretrained`].

+

+```py

+import torch
+from diffusers.pipelines.prx import PRXPipeline
+
+# Load the pipeline; the VAE and text encoder are downloaded from the Hugging Face Hub

+pipe = PRXPipeline.from_pretrained("Photoroom/prx-512-t2i-sft", torch_dtype=torch.bfloat16)

+pipe.to("cuda")

+

+prompt = "A front-facing portrait of a lion in the golden savanna at sunset."

+image = pipe(prompt, num_inference_steps=28, guidance_scale=5.0).images[0]

+image.save("prx_output.png")

+```

+

+### Manual Component Loading

+

+Load components individually to customize the pipeline, for instance to use quantized models.

+

+```py

+import torch

+from diffusers.pipelines.prx import PRXPipeline

+from diffusers.models import AutoencoderKL, AutoencoderDC

+from diffusers.models.transformers.transformer_prx import PRXTransformer2DModel

+from diffusers.schedulers import FlowMatchEulerDiscreteScheduler

+from transformers import T5GemmaModel, GemmaTokenizerFast

+from diffusers import BitsAndBytesConfig as DiffusersBitsAndBytesConfig

+from transformers import BitsAndBytesConfig

+

+quant_config = DiffusersBitsAndBytesConfig(load_in_8bit=True)

+# Load transformer

+transformer = PRXTransformer2DModel.from_pretrained(
+ "Photoroom/prx-512-t2i-sft",
+ subfolder="transformer",
+ quantization_config=quant_config,
+ torch_dtype=torch.bfloat16,
+)
+
+# Load scheduler
+scheduler = FlowMatchEulerDiscreteScheduler.from_pretrained(
+ "Photoroom/prx-512-t2i-sft", subfolder="scheduler"

+)

+

+# Load T5Gemma text encoder

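+t5gemma_model = T5GemmaModel.from_pretrained(
+    "google/t5gemma-2b-2b-ul2",
+    # transformers models take the transformers BitsAndBytesConfig, not the diffusers one
+    quantization_config=BitsAndBytesConfig(load_in_8bit=True),
+    torch_dtype=torch.bfloat16,
+)
+# Only the encoder half of T5Gemma is used to encode prompts (already in bfloat16)
+text_encoder = t5gemma_model.encoder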

+tokenizer = GemmaTokenizerFast.from_pretrained("google/t5gemma-2b-2b-ul2")

+tokenizer.model_max_length = 256

+

+# Load VAE: the Flux VAE checkpoints use AutoencoderKL (shown here); the DC-AE checkpoints use AutoencoderDC

+# Flux VAE

+vae = AutoencoderKL.from_pretrained("black-forest-labs/FLUX.1-dev",

+ subfolder="vae",

+ quantization_config=quant_config,

+ torch_dtype=torch.bfloat16)

+

+pipe = PRXPipeline(

+ transformer=transformer,

+ scheduler=scheduler,

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ vae=vae

+)

+pipe.to("cuda")

+```

+

+

+## Memory Optimization

+

+For memory-constrained environments, offload components to the CPU. Use one of the two offloading methods below rather than both:
+
+```py
+import torch
+from diffusers.pipelines.prx import PRXPipeline
+
+pipe = PRXPipeline.from_pretrained("Photoroom/prx-512-t2i-sft", torch_dtype=torch.bfloat16)
+pipe.enable_model_cpu_offload() # Offload components to CPU when not in use
+
+# Or, for even lower memory usage (but slower inference), use sequential CPU offload instead
+# pipe.enable_sequential_cpu_offload()

+```

+

+## PRXPipeline

+

+[[autodoc]] PRXPipeline

+ - all

+ - __call__

+

+## PRXPipelineOutput

+

+[[autodoc]] pipelines.prx.pipeline_output.PRXPipelineOutput

diff --git a/docs/source/en/api/pipelines/qwenimage.md b/docs/source/en/api/pipelines/qwenimage.md

new file mode 100644

index 000000000000..b3dd3dd93618

--- /dev/null

+++ b/docs/source/en/api/pipelines/qwenimage.md

@@ -0,0 +1,161 @@

+

+

+# QwenImage

+

+

+

+Qwen-Image from the Qwen team is an image generation foundation model in the Qwen series that achieves significant advances in complex text rendering and precise image editing. Experiments show strong general capabilities in both image generation and editing, with exceptional performance in text rendering, especially for Chinese.

+

+Qwen-Image comes in the following variants:

+

+| model type | model id |

+|:----------:|:--------:|

+| Qwen-Image | [`Qwen/Qwen-Image`](https://huggingface.co/Qwen/Qwen-Image) |

+| Qwen-Image-Edit | [`Qwen/Qwen-Image-Edit`](https://huggingface.co/Qwen/Qwen-Image-Edit) |

+| Qwen-Image-Edit Plus | [`Qwen/Qwen-Image-Edit-2509`](https://huggingface.co/Qwen/Qwen-Image-Edit-2509) |

+

+> [!TIP]

+> [Caching](../../optimization/cache) may also speed up inference by storing and reusing intermediate outputs.

+

+## LoRA for faster inference

+

+Use a LoRA from `lightx2v/Qwen-Image-Lightning` to speed up inference by reducing the

+number of steps. Refer to the code snippet below:

+

+

+

+> [!TIP]

+> The `guidance_scale` parameter exists to support guidance-distilled models that may be released in the future; passing it to the current pipelines has no effect. To enable classifier-free guidance, pass `true_cfg_scale` together with a `negative_prompt`. Even an empty negative prompt such as `" "` enables the classifier-free guidance computation.
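
For reference, classifier-free guidance combines the prompt-conditioned and negative-prompt predictions at each denoising step with the standard CFG formula. The sketch below uses hypothetical helpers (`uses_true_cfg`, `apply_cfg`), and the `true_cfg_scale > 1` threshold is our reading of the pipelines rather than a documented guarantee; the actual QwenImage implementation may add further steps around this formula.

```python
import numpy as np


def uses_true_cfg(true_cfg_scale, negative_prompt):
    """Guidance runs only with a scale above 1 and some negative prompt (even " ")."""
    return true_cfg_scale > 1 and negative_prompt is not None


def apply_cfg(cond_pred, uncond_pred, true_cfg_scale):
    """Classifier-free guidance: extrapolate from the negative-prompt prediction toward the prompt."""
    return uncond_pred + true_cfg_scale * (cond_pred - uncond_pred)


cond = np.array([1.0, 2.0])    # prediction with the prompt
uncond = np.array([0.5, 1.0])  # prediction with the negative prompt
print(uses_true_cfg(4.0, " "), uses_true_cfg(4.0, None))  # True False
print(apply_cfg(cond, uncond, 4.0))  # [2.5 5. ]
```

Because guidance needs a second prediction per step, enabling it roughly doubles the transformer compute per denoising step.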

+

+## Multi-image reference with QwenImageEditPlusPipeline

+

+With [`QwenImageEditPlusPipeline`], you can provide multiple images as input references.

+

+```py
+import torch

+from diffusers import QwenImageEditPlusPipeline

+from diffusers.utils import load_image

+

+pipe = QwenImageEditPlusPipeline.from_pretrained(

+ "Qwen/Qwen-Image-Edit-2509", torch_dtype=torch.bfloat16

+).to("cuda")

+

+image_1 = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/grumpy.jpg")

+image_2 = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/peng.png")

+image = pipe(

+ image=[image_1, image_2],

+ prompt='''put the penguin and the cat at a game show called "Qwen Edit Plus Games"''',

+ num_inference_steps=50

+).images[0]

+```

+

+## QwenImagePipeline

+

+[[autodoc]] QwenImagePipeline

+ - all

+ - __call__

+

+## QwenImageImg2ImgPipeline

+

+[[autodoc]] QwenImageImg2ImgPipeline

+ - all

+ - __call__

+

+## QwenImageInpaintPipeline

+

+[[autodoc]] QwenImageInpaintPipeline

+ - all

+ - __call__

+

+## QwenImageEditPipeline

+

+[[autodoc]] QwenImageEditPipeline

+ - all

+ - __call__

+

+## QwenImageEditInpaintPipeline

+

+[[autodoc]] QwenImageEditInpaintPipeline

+ - all

+ - __call__

+

+## QwenImageControlNetPipeline

+

+[[autodoc]] QwenImageControlNetPipeline

+ - all

+ - __call__

+

+## QwenImageEditPlusPipeline

+

+[[autodoc]] QwenImageEditPlusPipeline

+ - all

+ - __call__

+

+## QwenImagePipelineOutput

+

+[[autodoc]] pipelines.qwenimage.pipeline_output.QwenImagePipelineOutput

\ No newline at end of file

diff --git a/docs/source/en/api/pipelines/sana.md b/docs/source/en/api/pipelines/sana.md

index 3702b2771974..a948620f96cb 100644

--- a/docs/source/en/api/pipelines/sana.md

+++ b/docs/source/en/api/pipelines/sana.md

@@ -1,4 +1,4 @@

-

-# SanaSprintPipeline

+# SANA-Sprint

@@ -24,12 +24,6 @@ The abstract from the paper is:

*This paper presents SANA-Sprint, an efficient diffusion model for ultra-fast text-to-image (T2I) generation. SANA-Sprint is built on a pre-trained foundation model and augmented with hybrid distillation, dramatically reducing inference steps from 20 to 1-4. We introduce three key innovations: (1) We propose a training-free approach that transforms a pre-trained flow-matching model for continuous-time consistency distillation (sCM), eliminating costly training from scratch and achieving high training efficiency. Our hybrid distillation strategy combines sCM with latent adversarial distillation (LADD): sCM ensures alignment with the teacher model, while LADD enhances single-step generation fidelity. (2) SANA-Sprint is a unified step-adaptive model that achieves high-quality generation in 1-4 steps, eliminating step-specific training and improving efficiency. (3) We integrate ControlNet with SANA-Sprint for real-time interactive image generation, enabling instant visual feedback for user interaction. SANA-Sprint establishes a new Pareto frontier in speed-quality tradeoffs, achieving state-of-the-art performance with 7.59 FID and 0.74 GenEval in only 1 step — outperforming FLUX-schnell (7.94 FID / 0.71 GenEval) while being 10× faster (0.1s vs 1.1s on H100). It also achieves 0.1s (T2I) and 0.25s (ControlNet) latency for 1024×1024 images on H100, and 0.31s (T2I) on an RTX 4090, showcasing its exceptional efficiency and potential for AI-powered consumer applications (AIPC). Code and pre-trained models will be open-sourced.*

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

-

-

-

This pipeline was contributed by [lawrence-cj](https://github.com/lawrence-cj), [Shuchen Xue](https://github.com/scxue) and [Enze Xie](https://github.com/xieenze). The original codebase can be found [here](https://github.com/NVlabs/Sana). The original weights can be found under [hf.co/Efficient-Large-Model](https://huggingface.co/Efficient-Large-Model/).

Available models:

@@ -88,12 +82,46 @@ image.save("sana.png")

Users can tweak the `max_timesteps` value to experiment with the visual quality of the generated outputs. The default `max_timesteps` value was obtained with an inference-time search process. For more details, check out the paper.

+## Image to Image

+

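+[`SanaSprintImg2ImgPipeline`] performs image-to-image generation: it takes an input image and a text prompt and generates a new image conditioned on both. As in other diffusers image-to-image pipelines, `strength` controls how far the output may move away from the input image, with higher values allowing larger changes.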

+

+```py

+import torch

+from diffusers import SanaSprintImg2ImgPipeline

+from diffusers.utils import load_image

+

+image = load_image(

+ "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/penguin.png"

+)

+

+pipe = SanaSprintImg2ImgPipeline.from_pretrained(

+ "Efficient-Large-Model/Sana_Sprint_1.6B_1024px_diffusers",

+ torch_dtype=torch.bfloat16)

+pipe.to("cuda")

+

+image = pipe(

+ prompt="a cute pink bear",

+ image=image,

+ strength=0.5,

+ height=832,

+ width=480

+).images[0]

+image.save("output.png")

+```

+

## SanaSprintPipeline

[[autodoc]] SanaSprintPipeline

- all

- __call__

+## SanaSprintImg2ImgPipeline

+

+[[autodoc]] SanaSprintImg2ImgPipeline

+ - all

+ - __call__

+

## SanaPipelineOutput

diff --git a/docs/source/en/api/pipelines/sana_video.md b/docs/source/en/api/pipelines/sana_video.md

new file mode 100644

index 000000000000..9e330c758318

--- /dev/null

+++ b/docs/source/en/api/pipelines/sana_video.md

@@ -0,0 +1,189 @@

+

+

+# Sana-Video

+

+

+

+

+

+

+[SANA-Video: Efficient Video Generation with Block Linear Diffusion Transformer](https://huggingface.co/papers/2509.24695) from NVIDIA and MIT HAN Lab, by Junsong Chen, Yuyang Zhao, Jincheng Yu, Ruihang Chu, Junyu Chen, Shuai Yang, Xianbang Wang, Yicheng Pan, Daquan Zhou, Huan Ling, Haozhe Liu, Hongwei Yi, Hao Zhang, Muyang Li, Yukang Chen, Han Cai, Sanja Fidler, Ping Luo, Song Han, Enze Xie.

+

+The abstract from the paper is:

+

+*We introduce SANA-Video, a small diffusion model that can efficiently generate videos up to 720x1280 resolution and minute-length duration. SANA-Video synthesizes high-resolution, high-quality and long videos with strong text-video alignment at a remarkably fast speed, deployable on RTX 5090 GPU. Two core designs ensure our efficient, effective and long video generation: (1) Linear DiT: We leverage linear attention as the core operation, which is more efficient than vanilla attention given the large number of tokens processed in video generation. (2) Constant-Memory KV cache for Block Linear Attention: we design block-wise autoregressive approach for long video generation by employing a constant-memory state, derived from the cumulative properties of linear attention. This KV cache provides the Linear DiT with global context at a fixed memory cost, eliminating the need for a traditional KV cache and enabling efficient, minute-long video generation. In addition, we explore effective data filters and model training strategies, narrowing the training cost to 12 days on 64 H100 GPUs, which is only 1% of the cost of MovieGen. Given its low cost, SANA-Video achieves competitive performance compared to modern state-of-the-art small diffusion models (e.g., Wan 2.1-1.3B and SkyReel-V2-1.3B) while being 16x faster in measured latency. Moreover, SANA-Video can be deployed on RTX 5090 GPUs with NVFP4 precision, accelerating the inference speed of generating a 5-second 720p video from 71s to 29s (2.4x speedup). In summary, SANA-Video enables low-cost, high-quality video generation. [this https URL](https://github.com/NVlabs/SANA).*
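
The constant-memory idea can be illustrated with a toy NumPy sketch: in linear attention, the keys and values seen so far can be folded into a running state of fixed size (`d_k x d_v`), so memory does not grow with the number of processed tokens the way a standard KV cache does. This is not SANA-Video's implementation; the ReLU feature map, the block layout, and letting tokens attend to their whole block are simplifying assumptions. A quadratic reference implementation is included to check that both give the same result.

```python
import numpy as np


def phi(x):
    # Assumed non-negative feature map for the linear-attention kernel
    return np.maximum(x, 0.0)


def block_causal_linear_attention(q, k, v, block_size, eps=1e-6):
    """Linear attention over blocks, carrying a fixed-size state instead of a growing KV cache."""
    n, d_k = q.shape
    state = np.zeros((d_k, v.shape[1]))  # running sum of phi(k_j) v_j^T
    normalizer = np.zeros(d_k)           # running sum of phi(k_j)
    out = np.empty((n, v.shape[1]))
    for start in range(0, n, block_size):
        block = slice(start, start + block_size)
        phi_k = phi(k[block])
        # Fold the current block into the state: its tokens see earlier blocks and their own block
        state += phi_k.T @ v[block]
        normalizer += phi_k.sum(axis=0)
        phi_q = phi(q[block])
        out[block] = (phi_q @ state) / (phi_q @ normalizer + eps)[:, None]
    return out


def reference_attention(q, k, v, block_size, eps=1e-6):
    """Same result computed with an explicit n x n block-causal score matrix."""
    block_ids = np.arange(q.shape[0]) // block_size
    mask = block_ids[None, :] <= block_ids[:, None]
    scores = (phi(q) @ phi(k).T) * mask
    return (scores @ v) / (scores.sum(axis=1, keepdims=True) + eps)


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((10, 4)) for _ in range(3))
print(np.allclose(block_causal_linear_attention(q, k, v, block_size=4),
                  reference_attention(q, k, v, block_size=4)))  # True
```

The reference version needs an `n x n` score matrix, while the running version only keeps `state` and `normalizer`, whose sizes depend on the head dimensions alone.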

+

+This pipeline was contributed by the SANA Team. The original codebase can be found [here](https://github.com/NVlabs/Sana). The original weights can be found under [hf.co/Efficient-Large-Model](https://hf.co/collections/Efficient-Large-Model/sana-video).

+

+Available models:

+

+| Model | Recommended dtype |

+|:-----:|:-----------------:|

+| [`Efficient-Large-Model/SANA-Video_2B_480p_diffusers`](https://huggingface.co/Efficient-Large-Model/SANA-Video_2B_480p_diffusers) | `torch.bfloat16` |

+

+Refer to [this](https://huggingface.co/collections/Efficient-Large-Model/sana-video) collection for more information.

+

+Note: The recommended dtype mentioned is for the transformer weights. The text encoder and VAE weights must stay in `torch.bfloat16` or `torch.float32` for the model to work correctly. Please refer to the inference example below to see how to load the model with the recommended dtype.

+

+

+## Generation Pipelines

+

+


+

+

+The example below demonstrates how to use the text-to-video pipeline to generate a video using a text description.

+

+```python

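+import torch
+from diffusers import SanaVideoPipeline
+from diffusers.utils import export_to_video
+
+pipe = SanaVideoPipeline.from_pretrained(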

+ "Efficient-Large-Model/SANA-Video_2B_480p_diffusers",

+ torch_dtype=torch.bfloat16,

+)

+pipe.text_encoder.to(torch.bfloat16)

+pipe.vae.to(torch.float32)

+pipe.to("cuda")

+

+prompt = "A cat and a dog baking a cake together in a kitchen. The cat is carefully measuring flour, while the dog is stirring the batter with a wooden spoon. The kitchen is cozy, with sunlight streaming through the window."

+negative_prompt = "A chaotic sequence with misshapen, deformed limbs in heavy motion blur, sudden disappearance, jump cuts, jerky movements, rapid shot changes, frames out of sync, inconsistent character shapes, temporal artifacts, jitter, and ghosting effects, creating a disorienting visual experience."

+motion_scale = 30

+motion_prompt = f" motion score: {motion_scale}."

+prompt = prompt + motion_prompt

+

+video = pipe(

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ height=480,

+ width=832,

+ frames=81,

+ guidance_scale=6,

+ num_inference_steps=50,

+ generator=torch.Generator(device="cuda").manual_seed(0),

+).frames[0]

+

+export_to_video(video, "sana_video.mp4", fps=16)

+```

+

+

+

+

+The example below demonstrates how to use the image-to-video pipeline to generate a video using a text description and a starting frame.

+

+```python

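+import torch
+from diffusers import FlowMatchEulerDiscreteScheduler, SanaImageToVideoPipeline
+from diffusers.utils import export_to_video, load_image
+
+pipe = SanaImageToVideoPipeline.from_pretrained(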

+ "Efficient-Large-Model/SANA-Video_2B_480p_diffusers",

+ torch_dtype=torch.bfloat16,

+)

+pipe.scheduler = FlowMatchEulerDiscreteScheduler.from_config(pipe.scheduler.config, flow_shift=8.0)

+pipe.vae.to(torch.float32)

+pipe.text_encoder.to(torch.bfloat16)

+pipe.to("cuda")

+

+image = load_image("https://raw.githubusercontent.com/NVlabs/Sana/refs/heads/main/asset/samples/i2v-1.png")

+prompt = "A woman stands against a stunning sunset backdrop, her long, wavy brown hair gently blowing in the breeze. She wears a sleeveless, light-colored blouse with a deep V-neckline, which accentuates her graceful posture. The warm hues of the setting sun cast a golden glow across her face and hair, creating a serene and ethereal atmosphere. The background features a blurred landscape with soft, rolling hills and scattered clouds, adding depth to the scene. The camera remains steady, capturing the tranquil moment from a medium close-up angle."

+negative_prompt = "A chaotic sequence with misshapen, deformed limbs in heavy motion blur, sudden disappearance, jump cuts, jerky movements, rapid shot changes, frames out of sync, inconsistent character shapes, temporal artifacts, jitter, and ghosting effects, creating a disorienting visual experience."

+motion_scale = 30

+motion_prompt = f" motion score: {motion_scale}."

+prompt = prompt + motion_prompt

+


+video = pipe(

+ image=image,

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ height=480,

+ width=832,

+ frames=81,

+ guidance_scale=6,

+ num_inference_steps=50,

+ generator=torch.Generator(device="cuda").manual_seed(0),

+).frames[0]

+

+export_to_video(video, "sana-i2v.mp4", fps=16)

+```

+

+

+

+

+

+## Quantization

+

+Quantization helps reduce the memory requirements of very large models by storing model weights in a lower precision data type. However, quantization may have varying impact on video quality depending on the video model.
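
As a rough back-of-the-envelope check, the memory needed just to store weights scales with the number of bits per parameter. The sketch assumes ~2B parameters (taken from the `2B` in the checkpoint name) and ignores activations, the VAE, and any layers that are not quantized, so real savings are usually somewhat smaller. `weight_memory_gib` is a hypothetical helper.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Memory for the weights alone, in GiB (ignores activations, buffers, and overhead)."""
    return num_params * bits_per_param / 8 / 1024**3


num_params = 2e9  # approximation taken from the "2B" in the checkpoint name
for label, bits in [("bf16/fp16", 16), ("8-bit", 8)]:
    print(f"{label}: {weight_memory_gib(num_params, bits):.2f} GiB")
# bf16/fp16: 3.73 GiB
# 8-bit: 1.86 GiB
```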

+

+Refer to the [Quantization](../../quantization/overview) overview to learn more about supported quantization backends and selecting a quantization backend that supports your use case. The example below demonstrates how to load a quantized [`SanaVideoPipeline`] for inference with bitsandbytes.

+

+```py

+import torch

+from diffusers import BitsAndBytesConfig as DiffusersBitsAndBytesConfig, SanaVideoTransformer3DModel, SanaVideoPipeline
+from diffusers.utils import export_to_video
+from transformers import BitsAndBytesConfig, AutoModel

+

+quant_config = BitsAndBytesConfig(load_in_8bit=True)

+text_encoder_8bit = AutoModel.from_pretrained(

+ "Efficient-Large-Model/SANA-Video_2B_480p_diffusers",

+ subfolder="text_encoder",

+ quantization_config=quant_config,

+ torch_dtype=torch.bfloat16,
+)
+
+quant_config = DiffusersBitsAndBytesConfig(load_in_8bit=True)
+transformer_8bit = SanaVideoTransformer3DModel.from_pretrained(
+ "Efficient-Large-Model/SANA-Video_2B_480p_diffusers",
+ subfolder="transformer",
+ quantization_config=quant_config,
+ torch_dtype=torch.bfloat16,
+)
+
+pipeline = SanaVideoPipeline.from_pretrained(
+ "Efficient-Large-Model/SANA-Video_2B_480p_diffusers",
+ text_encoder=text_encoder_8bit,
+ transformer=transformer_8bit,
+ torch_dtype=torch.bfloat16,

+ device_map="balanced",

+)

+

+motion_score = 30
+prompt = "Evening, backlight, side lighting, soft light, high contrast, mid-shot, centered composition, clean solo shot, warm color. A young Caucasian man stands in a forest, golden light glimmers on his hair as sunlight filters through the leaves. He wears a light shirt, wind gently blowing his hair and collar, light dances across his face with his movements. The background is blurred, with dappled light and soft tree shadows in the distance. The camera focuses on his lifted gaze, clear and emotional."
+negative_prompt = "A chaotic sequence with misshapen, deformed limbs in heavy motion blur, sudden disappearance, jump cuts, jerky movements, rapid shot changes, frames out of sync, inconsistent character shapes, temporal artifacts, jitter, and ghosting effects, creating a disorienting visual experience."
+motion_prompt = f" motion score: {motion_score}."

+prompt = prompt + motion_prompt

+

+output = pipeline(

+ prompt=prompt,

+ negative_prompt=negative_prompt,

+ height=480,

+ width=832,

+ num_frames=81,

+ guidance_scale=6.0,

+ num_inference_steps=50

+).frames[0]

+export_to_video(output, "sana-video-output.mp4", fps=16)

+```

+

+## SanaVideoPipeline

+

+[[autodoc]] SanaVideoPipeline

+ - all

+ - __call__

+

+

+## SanaImageToVideoPipeline

+

+[[autodoc]] SanaImageToVideoPipeline

+ - all

+ - __call__

+

+

+## SanaVideoPipelineOutput

+

+[[autodoc]] pipelines.sana_video.pipeline_sana_video.SanaVideoPipelineOutput

diff --git a/docs/source/en/api/pipelines/self_attention_guidance.md b/docs/source/en/api/pipelines/self_attention_guidance.md

index d656ce93f104..8d411598ae6d 100644

--- a/docs/source/en/api/pipelines/self_attention_guidance.md

+++ b/docs/source/en/api/pipelines/self_attention_guidance.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Self-Attention Guidance

[Improving Sample Quality of Diffusion Models Using Self-Attention Guidance](https://huggingface.co/papers/2210.00939) is by Susung Hong et al.

@@ -20,11 +23,8 @@ The abstract from the paper is:

You can find additional information about Self-Attention Guidance on the [project page](https://ku-cvlab.github.io/Self-Attention-Guidance), [original codebase](https://github.com/KU-CVLAB/Self-Attention-Guidance), and try it out in a [demo](https://huggingface.co/spaces/susunghong/Self-Attention-Guidance) or [notebook](https://colab.research.google.com/github/SusungHong/Self-Attention-Guidance/blob/main/SAG_Stable.ipynb).

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

-

-

+> [!TIP]

+> Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

## StableDiffusionSAGPipeline

[[autodoc]] StableDiffusionSAGPipeline

diff --git a/docs/source/en/api/pipelines/semantic_stable_diffusion.md b/docs/source/en/api/pipelines/semantic_stable_diffusion.md

index b9aacd3518d8..dda428e80f8f 100644

--- a/docs/source/en/api/pipelines/semantic_stable_diffusion.md

+++ b/docs/source/en/api/pipelines/semantic_stable_diffusion.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Semantic Guidance

Semantic Guidance for Diffusion Models was proposed in [SEGA: Instructing Text-to-Image Models using Semantic Guidance](https://huggingface.co/papers/2301.12247) and provides strong semantic control over image generation.

@@ -19,11 +22,8 @@ The abstract from the paper is:

*Text-to-image diffusion models have recently received a lot of interest for their astonishing ability to produce high-fidelity images from text only. However, achieving one-shot generation that aligns with the user's intent is nearly impossible, yet small changes to the input prompt often result in very different images. This leaves the user with little semantic control. To put the user in control, we show how to interact with the diffusion process to flexibly steer it along semantic directions. This semantic guidance (SEGA) generalizes to any generative architecture using classifier-free guidance. More importantly, it allows for subtle and extensive edits, changes in composition and style, as well as optimizing the overall artistic conception. We demonstrate SEGA's effectiveness on both latent and pixel-based diffusion models such as Stable Diffusion, Paella, and DeepFloyd-IF using a variety of tasks, thus providing strong evidence for its versatility, flexibility, and improvements over existing methods.*

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

-

-

+> [!TIP]

+> Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

## SemanticStableDiffusionPipeline

[[autodoc]] SemanticStableDiffusionPipeline

diff --git a/docs/source/en/api/pipelines/shap_e.md b/docs/source/en/api/pipelines/shap_e.md

index 3c1f939c1fce..3e505894ca80 100644

--- a/docs/source/en/api/pipelines/shap_e.md

+++ b/docs/source/en/api/pipelines/shap_e.md

@@ -1,4 +1,4 @@

-

+

+

+

+# SkyReels-V2: Infinite-length Film Generative Model

+

+[SkyReels-V2](https://huggingface.co/papers/2504.13074) by the SkyReels Team from Skywork AI.

+

+*Recent advances in video generation have been driven by diffusion models and autoregressive frameworks, yet critical challenges persist in harmonizing prompt adherence, visual quality, motion dynamics, and duration: compromises in motion dynamics to enhance temporal visual quality, constrained video duration (5-10 seconds) to prioritize resolution, and inadequate shot-aware generation stemming from general-purpose MLLMs' inability to interpret cinematic grammar, such as shot composition, actor expressions, and camera motions. These intertwined limitations hinder realistic long-form synthesis and professional film-style generation. To address these limitations, we propose SkyReels-V2, an Infinite-length Film Generative Model, that synergizes Multi-modal Large Language Model (MLLM), Multi-stage Pretraining, Reinforcement Learning, and Diffusion Forcing Framework. Firstly, we design a comprehensive structural representation of video that combines the general descriptions by the Multi-modal LLM and the detailed shot language by sub-expert models. Aided with human annotation, we then train a unified Video Captioner, named SkyCaptioner-V1, to efficiently label the video data. Secondly, we establish progressive-resolution pretraining for the fundamental video generation, followed by a four-stage post-training enhancement: Initial concept-balanced Supervised Fine-Tuning (SFT) improves baseline quality; Motion-specific Reinforcement Learning (RL) training with human-annotated and synthetic distortion data addresses dynamic artifacts; Our diffusion forcing framework with non-decreasing noise schedules enables long-video synthesis in an efficient search space; Final high-quality SFT refines visual fidelity. All the code and models are available at [this https URL](https://github.com/SkyworkAI/SkyReels-V2).*

+

+You can find all the original SkyReels-V2 checkpoints under the [Skywork](https://huggingface.co/collections/Skywork/skyreels-v2-6801b1b93df627d441d0d0d9) organization.

+

+The following SkyReels-V2 models are supported in Diffusers:

+- [SkyReels-V2 DF 1.3B - 540P](https://huggingface.co/Skywork/SkyReels-V2-DF-1.3B-540P-Diffusers)

+- [SkyReels-V2 DF 14B - 540P](https://huggingface.co/Skywork/SkyReels-V2-DF-14B-540P-Diffusers)

+- [SkyReels-V2 DF 14B - 720P](https://huggingface.co/Skywork/SkyReels-V2-DF-14B-720P-Diffusers)

+- [SkyReels-V2 T2V 14B - 540P](https://huggingface.co/Skywork/SkyReels-V2-T2V-14B-540P-Diffusers)

+- [SkyReels-V2 T2V 14B - 720P](https://huggingface.co/Skywork/SkyReels-V2-T2V-14B-720P-Diffusers)

+- [SkyReels-V2 I2V 1.3B - 540P](https://huggingface.co/Skywork/SkyReels-V2-I2V-1.3B-540P-Diffusers)

+- [SkyReels-V2 I2V 14B - 540P](https://huggingface.co/Skywork/SkyReels-V2-I2V-14B-540P-Diffusers)

+- [SkyReels-V2 I2V 14B - 720P](https://huggingface.co/Skywork/SkyReels-V2-I2V-14B-720P-Diffusers)

+- [SkyReels-V2 FLF2V 1.3B - 540P](https://huggingface.co/Skywork/SkyReels-V2-FLF2V-1.3B-540P-Diffusers)

+

+> [!TIP]

+> Click on the SkyReels-V2 models in the right sidebar for more examples of video generation.

+

+### A _Visual_ Demonstration

+

+The example below has the following parameters:

+

+- `base_num_frames=97`

+- `num_frames=97`

+- `num_inference_steps=30`

+- `ar_step=5`

+- `causal_block_size=5`

+

+With `vae_scale_factor_temporal=4`, expect `5` blocks of `5` frames each as calculated by:

+

+`num_latent_frames: (97-1)//vae_scale_factor_temporal+1 = 25 frames -> 5 blocks of 5 frames each`

+

+And the maximum context length in the latent space is calculated with `base_num_latent_frames`:

+

+`base_num_latent_frames = (97-1)//vae_scale_factor_temporal+1 = 25 -> 25//5 = 5 blocks`
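As a quick sanity check, the latent-frame and block arithmetic above can be reproduced in plain Python. This is a sketch of the formulas quoted in this section, not pipeline code. `latent_block_layout` is a hypothetical helper, and its defaults (`vae_scale_factor_temporal=4`, `causal_block_size=5`) are the values assumed in this example.

```python
def latent_block_layout(num_frames, vae_scale_factor_temporal=4, causal_block_size=5):
    """Return (num_latent_frames, num_blocks) for a given pixel-space frame count.

    Hypothetical helper that mirrors the formulas above; it is not part of Diffusers.
    """
    # Temporal compression of the VAE: (num_frames - 1) // factor + 1
    num_latent_frames = (num_frames - 1) // vae_scale_factor_temporal + 1
    # Latent frames are grouped into causal blocks of `causal_block_size` frames
    num_blocks = num_latent_frames // causal_block_size
    return num_latent_frames, num_blocks


print(latent_block_layout(97))   # (25, 5): num_frames=97 and base_num_frames=97
print(latent_block_layout(121))  # (31, 6): base_num_frames=121, as suggested for 720P
```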

+

+Asynchronous Processing Timeline:

+```text

+┌───────────────────────────────────────────────────────────────┐

+│ Steps:    1    6    11   16   21   26   31   36   41   46  50 │

+│ Block 1: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■]                     │

+│ Block 2:      [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■]                │

+│ Block 3:           [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■]           │

+│ Block 4:                [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■]      │

+│ Block 5:                     [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+└───────────────────────────────────────────────────────────────┘

+```

+

+For Long Videos (`num_frames` > `base_num_frames`):

+`base_num_frames` acts as the "sliding window size" for processing long videos.

+

+Example: `257`-frame video with `base_num_frames=97`, `overlap_history=17`

+```text

+┌──── Iteration 1 (frames 1-97) ────┐

+│ Processing window: 97 frames │ → 5 blocks,

+│ Generates: frames 1-97 │ async processing

+└───────────────────────────────────┘

+ ┌────── Iteration 2 (frames 81-177) ──────┐

+ │ Processing window: 97 frames │

+ │ Overlap: 17 frames (81-97) from prev │ → 5 blocks,

+ │ Generates: frames 98-177 │ async processing

+ └─────────────────────────────────────────┘

+ ┌────── Iteration 3 (frames 161-257) ──────┐

+ │ Processing window: 97 frames │

+ │ Overlap: 17 frames (161-177) from prev │ → 5 blocks,

+ │ Generates: frames 178-257 │ async processing

+ └──────────────────────────────────────────┘

+```

+

+Each iteration independently runs the asynchronous processing with its own `5` blocks.

+`base_num_frames` controls:

+1. Memory usage (larger window = more VRAM)

+2. Model context length (must match training constraints)

+3. Number of blocks per iteration (`base_num_latent_frames // causal_block_size`)
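The window positions in the long-video diagram follow from `base_num_frames` and `overlap_history` alone. The sketch below recomputes them in pixel-frame units (1-indexed, inclusive), assuming every iteration reuses exactly `overlap_history` frames from the previous one. `sliding_windows` is a hypothetical helper; the pipeline does this bookkeeping in latent space, so edge cases (such as a final window shorter than `base_num_frames`) may be handled differently there.

```python
def sliding_windows(num_frames, base_num_frames, overlap_history):
    """Return (window_start, window_end, first_new_frame) for each iteration.

    Hypothetical helper reproducing the diagram above; frame numbers are 1-indexed and inclusive.
    """
    end = min(base_num_frames, num_frames)
    windows = [(1, end, 1)]  # the first iteration generates every frame in its window
    while end < num_frames:
        # Each later window starts `overlap_history` frames before the previous window ends
        start = end - overlap_history + 1
        end = min(start + base_num_frames - 1, num_frames)
        windows.append((start, end, start + overlap_history))
    return windows


for start, end, first_new in sliding_windows(257, base_num_frames=97, overlap_history=17):
    print(f"window {start}-{end}: generates frames {first_new}-{end}")
# window 1-97: generates frames 1-97
# window 81-177: generates frames 98-177
# window 161-257: generates frames 178-257
```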

+

+Each block takes `30` steps to complete denoising.

+Block N starts at step: `1 + (N-1) x ar_step`

+Total steps: `30 + (5-1) x 5 = 50` steps
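The two formulas above can be written out directly. This sketch returns each block's starting step and the total number of steps, and shows that `ar_step=0` collapses to the synchronous case. `async_schedule` is a hypothetical helper, not a Diffusers function.

```python
def async_schedule(num_inference_steps, num_blocks, ar_step):
    """Return (start_steps, total_steps) for the staggered block schedule.

    Hypothetical helper; it only restates the formulas in the text.
    """
    # Block N (1-indexed) starts at step 1 + (N - 1) * ar_step
    start_steps = [1 + (n - 1) * ar_step for n in range(1, num_blocks + 1)]
    # The last block starts (num_blocks - 1) * ar_step steps late and still needs every denoising step
    total_steps = num_inference_steps + (num_blocks - 1) * ar_step
    return start_steps, total_steps


print(async_schedule(30, num_blocks=5, ar_step=5))  # ([1, 6, 11, 16, 21], 50)
print(async_schedule(30, num_blocks=5, ar_step=0))  # ([1, 1, 1, 1, 1], 30), i.e. synchronous mode
```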

+

+

+Synchronous mode (`ar_step=0`) would process all blocks/frames simultaneously:

+```text

+┌──────────────────────────────────────────────┐

+│ Steps: 1 ... 30 │

+│ All blocks: [■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■] │

+└──────────────────────────────────────────────┘

+```

+Total steps: `30` steps

+

+

+An example of how the step matrix is constructed for asynchronous processing:

+Given the parameters: (`num_inference_steps=30, flow_shift=8, num_frames=97, ar_step=5, causal_block_size=5`)

+```

+- num_latent_frames = (97 frames - 1) // (4 temporal downsampling) + 1 = 25

+- step_template = [999, 995, 991, 986, 980, 975, 969, 963, 956, 948,

+ 941, 932, 922, 912, 901, 888, 874, 859, 841, 822,

+ 799, 773, 743, 708, 666, 615, 551, 470, 363, 216]

+```
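The `step_template` values can be reproduced under an assumption about the scheduler: evenly spaced flow-matching sigmas (from `1 - 1/1000` down to `(1 - 1/1000) / num_inference_steps`) are shifted with `sigma' = flow_shift * sigma / (1 + (flow_shift - 1) * sigma)`, scaled to 1000 training timesteps, and truncated to integers. This is a sketch written to match the numbers listed above; `flow_step_template` is a hypothetical helper, and `UniPCMultistepScheduler.set_timesteps` remains the authoritative implementation.

```python
def flow_step_template(num_inference_steps, flow_shift, num_train_timesteps=1000):
    """Hypothetical helper: integer timesteps from shifted, evenly spaced flow-matching sigmas."""
    sigma_max = 1.0 - 1.0 / num_train_timesteps  # assumed upper end of the unshifted sigmas
    template = []
    for k in range(num_inference_steps):
        # Unshifted sigmas decrease linearly from sigma_max to sigma_max / num_inference_steps
        sigma = sigma_max * (num_inference_steps - k) / num_inference_steps
        # A larger flow_shift keeps more of the schedule at high noise levels
        shifted = flow_shift * sigma / (1.0 + (flow_shift - 1.0) * sigma)
        template.append(int(shifted * num_train_timesteps))  # truncate to an integer timestep
    return template


print(flow_step_template(30, flow_shift=8.0))
```

With `num_inference_steps=30` and `flow_shift=8.0`, this yields the same 30 values as `step_template` above.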

+

+The algorithm creates a `50x25` `step_matrix` where:

+```

+- Row 1: [999×5, 999×5, 999×5, 999×5, 999×5]

+- Row 2: [995×5, 999×5, 999×5, 999×5, 999×5]

+- Row 3: [991×5, 999×5, 999×5, 999×5, 999×5]

+- ...

+- Row 7: [969×5, 995×5, 999×5, 999×5, 999×5]

+- ...

+- Row 21: [799×5, 888×5, 941×5, 975×5, 999×5]

+- ...

+- Row 35: [ 0×5, 216×5, 666×5, 822×5, 901×5]

+- ...

+- Row 42: [ 0×5, 0×5, 0×5, 551×5, 773×5]

+- ...

+- Row 50: [ 0×5, 0×5, 0×5, 0×5, 216×5]

+```

+

+Detailed Row `6` Analysis:

+```

+- step_matrix[5]: [ 975×5, 999×5, 999×5, 999×5, 999×5]

+- step_index[5]: [ 6×5, 1×5, 0×5, 0×5, 0×5]

+- step_update_mask[5]: [True×5, True×5, False×5, False×5, False×5]

+- valid_interval[5]: (0, 25)

+```

+

+Key Pattern: Block `i` lags behind Block `i-1` by exactly `ar_step=5` denoising steps, creating the

+staggered "diffusion forcing" effect where later blocks condition on cleaner earlier blocks.
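The staggering rule can be sketched in pure Python. Under the assumptions of this example (the 30-value `step_template` above, 25 latent frames, `causal_block_size=5`, `ar_step=5`), the sketch below rebuilds the rows listed above. Index `0` stands for a block that has not started (timestep `999`), and the index one past the template stands for a finished block (timestep `0`). `build_step_matrix` is a hypothetical helper written for illustration; it is not the pipeline's internal code and omits details such as `valid_interval` and prefilled frames.

```python
STEP_TEMPLATE = [999, 995, 991, 986, 980, 975, 969, 963, 956, 948,
                 941, 932, 922, 912, 901, 888, 874, 859, 841, 822,
                 799, 773, 743, 708, 666, 615, 551, 470, 363, 216]


def build_step_matrix(step_template, num_latent_frames, causal_block_size, ar_step):
    """Hypothetical sketch of the staggered diffusion-forcing schedule.

    Returns (step_matrix, step_index, update_mask), each a list of rows with one entry per latent frame.
    """
    num_blocks = num_latent_frames // causal_block_size
    num_iterations = len(step_template) + 1
    # Index 0 = not started yet (timestep 999); index num_iterations = fully denoised (timestep 0)
    padded = [999] + list(step_template) + [0]

    def expand(values):
        # Repeat each block-level value for every latent frame in that block
        return [v for v in values for _ in range(causal_block_size)]

    step_matrix, step_index, update_mask = [], [], []
    prev = [0] * num_blocks
    # Continue until every block has taken all of its denoising steps
    while not all(p >= num_iterations - 1 for p in prev):
        row = []
        for i in range(num_blocks):
            if i == 0 or prev[i - 1] >= num_iterations - 1:
                # First block, or the previous block is already done: advance by one step
                value = prev[i] + 1
            else:
                # Otherwise trail the previous block by ar_step steps
                value = row[i - 1] - ar_step
            row.append(min(max(value, 0), num_iterations))
        # A block is updated in this row if its index changed and it is not already finished
        mask = [r != p and r != num_iterations for r, p in zip(row, prev)]
        step_index.append(expand(row))
        step_matrix.append(expand([padded[j] for j in row]))
        update_mask.append(expand(mask))
        prev = row
    return step_matrix, step_index, update_mask


matrix, index, mask = build_step_matrix(STEP_TEMPLATE, num_latent_frames=25, causal_block_size=5, ar_step=5)
print(len(matrix), len(matrix[0]))  # 50 25
print(matrix[5][::5])  # row 6, one value per block: [975, 999, 999, 999, 999]
print(index[5][::5])   # [6, 1, 0, 0, 0]
print(mask[5][::5])    # [True, True, False, False, False]
```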

+

+

+### Text-to-Video Generation

+

+The example below demonstrates how to generate a video from text.

+

+

+

+

+Refer to the [Reduce memory usage](../../optimization/memory) guide for more details about the various memory saving techniques.

+

+From the original repo:

+>You can use --ar_step 5 to enable asynchronous inference. When asynchronous inference, --causal_block_size 5 is recommended while it is not supposed to be set for synchronous generation... Asynchronous inference will take more steps to diffuse the whole sequence which means it will be SLOWER than synchronous mode. In our experiments, asynchronous inference may improve the instruction following and visual consistent performance.

+

+```py

+import torch

+from diffusers import AutoModel, SkyReelsV2DiffusionForcingPipeline, UniPCMultistepScheduler

+from diffusers.utils import export_to_video

+

+

+model_id = "Skywork/SkyReels-V2-DF-1.3B-540P-Diffusers"

+vae = AutoModel.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)

+

+pipeline = SkyReelsV2DiffusionForcingPipeline.from_pretrained(

+ model_id,

+ vae=vae,

+ torch_dtype=torch.bfloat16,

+)

+pipeline.to("cuda")

+flow_shift = 8.0 # 8.0 for T2V, 5.0 for I2V

+pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config, flow_shift=flow_shift)

+

+prompt = "A cat and a dog baking a cake together in a kitchen. The cat is carefully measuring flour, while the dog is stirring the batter with a wooden spoon. The kitchen is cozy, with sunlight streaming through the window."

+

+output = pipeline(

+ prompt=prompt,

+ num_inference_steps=30,

+ height=544, # 720 for 720P

+ width=960, # 1280 for 720P

+ num_frames=97,

+ base_num_frames=97, # 121 for 720P

+ ar_step=5, # Controls asynchronous inference (0 for synchronous mode)

+ causal_block_size=5, # Number of frames in each block for asynchronous processing

+ overlap_history=None, # Number of frames to overlap for smooth transitions in long videos; 17 for long video generations

+ addnoise_condition=20, # Improves consistency in long video generation

+).frames[0]

+export_to_video(output, "video.mp4", fps=24, quality=8)

+```

+

+

+

+

+### First-Last-Frame-to-Video Generation

+

+The example below demonstrates how to use the image-to-video pipeline to generate a video using a text description, a starting frame, and an ending frame.

+

+

+

+

+```python

+import numpy as np

+import torch

+import torchvision.transforms.functional as TF

+from diffusers import AutoencoderKLWan, SkyReelsV2DiffusionForcingImageToVideoPipeline, UniPCMultistepScheduler

+from diffusers.utils import export_to_video, load_image

+

+

+model_id = "Skywork/SkyReels-V2-DF-1.3B-720P-Diffusers"

+vae = AutoencoderKLWan.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)

+pipeline = SkyReelsV2DiffusionForcingImageToVideoPipeline.from_pretrained(

+ model_id, vae=vae, torch_dtype=torch.bfloat16

+)

+pipeline.to("cuda")

+flow_shift = 5.0 # 8.0 for T2V, 5.0 for I2V

+pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config, flow_shift=flow_shift)

+

+first_frame = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/flf2v_input_first_frame.png")

+last_frame = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/flf2v_input_last_frame.png")

+

+def aspect_ratio_resize(image, pipeline, max_area=720 * 1280):

+    # Preserve the aspect ratio and snap height/width down to multiples of mod_value

+    aspect_ratio = image.height / image.width

+    mod_value = pipeline.vae_scale_factor_spatial * pipeline.transformer.config.patch_size[1]

+    height = round(np.sqrt(max_area * aspect_ratio)) // mod_value * mod_value

+    width = round(np.sqrt(max_area / aspect_ratio)) // mod_value * mod_value

+    image = image.resize((width, height))

+    return image, height, width

+

+def center_crop_resize(image, height, width):

+    # Scale the image so it fully covers the target size (the first frame's dimensions)

+    resize_ratio = max(width / image.width, height / image.height)

+    new_width = round(image.width * resize_ratio)

+    new_height = round(image.height * resize_ratio)

+    image = image.resize((new_width, new_height))

+

+    # Center-crop to the target size; torchvision expects the size as [height, width]

+    image = TF.center_crop(image, [height, width])

+

+    return image, height, width

+

+first_frame, height, width = aspect_ratio_resize(first_frame, pipeline)

+if last_frame.size != first_frame.size:

+ last_frame, _, _ = center_crop_resize(last_frame, height, width)

+

+prompt = "CG animation style, a small blue bird takes off from the ground, flapping its wings. The bird's feathers are delicate, with a unique pattern on its chest. The background shows a blue sky with white clouds under bright sunshine. The camera follows the bird upward, capturing its flight and the vastness of the sky from a close-up, low-angle perspective."

+

+output = pipeline(

+ image=first_frame, last_image=last_frame, prompt=prompt, height=height, width=width, guidance_scale=5.0

+).frames[0]

+export_to_video(output, "video.mp4", fps=24, quality=8)

+```

+

+

+

+

+

+### Video-to-Video Generation

+

+

+

+

+`SkyReelsV2DiffusionForcingVideoToVideoPipeline` extends a given video.

+

+```python

+import numpy as np

+import torch

+import torchvision.transforms.functional as TF

+from diffusers import AutoencoderKLWan, SkyReelsV2DiffusionForcingVideoToVideoPipeline, UniPCMultistepScheduler

+from diffusers.utils import export_to_video, load_video

+

+

+model_id = "Skywork/SkyReels-V2-DF-1.3B-720P-Diffusers"

+vae = AutoencoderKLWan.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)

+pipeline = SkyReelsV2DiffusionForcingVideoToVideoPipeline.from_pretrained(

+ model_id, vae=vae, torch_dtype=torch.bfloat16

+)

+pipeline.to("cuda")

+flow_shift = 5.0 # 8.0 for T2V, 5.0 for I2V

+pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config, flow_shift=flow_shift)

+

+video = load_video("input_video.mp4")

+

+prompt = "CG animation style, a small blue bird takes off from the ground, flapping its wings. The bird's feathers are delicate, with a unique pattern on its chest. The background shows a blue sky with white clouds under bright sunshine. The camera follows the bird upward, capturing its flight and the vastness of the sky from a close-up, low-angle perspective."

+

+output = pipeline(

+    video=video, prompt=prompt, height=720, width=1280, guidance_scale=5.0, overlap_history=17,

+    num_inference_steps=30, num_frames=257, base_num_frames=121,  # optionally add ar_step=5, causal_block_size=5 for asynchronous inference

+).frames[0]

+export_to_video(output, "video.mp4", fps=24, quality=8)

+# Total frames will be the number of frames of the given video + 257

+```

+

+

+

+

+## Notes

+

+- SkyReels-V2 supports LoRAs with [`~loaders.SkyReelsV2LoraLoaderMixin.load_lora_weights`].

+

+- `SkyReelsV2Pipeline` and `SkyReelsV2ImageToVideoPipeline` are also available; these pipelines don't apply the Diffusion Forcing framework.

+

+

+## SkyReelsV2DiffusionForcingPipeline

+

+[[autodoc]] SkyReelsV2DiffusionForcingPipeline

+ - all

+ - __call__

+

+## SkyReelsV2DiffusionForcingImageToVideoPipeline

+

+[[autodoc]] SkyReelsV2DiffusionForcingImageToVideoPipeline

+ - all

+ - __call__

+

+## SkyReelsV2DiffusionForcingVideoToVideoPipeline

+

+[[autodoc]] SkyReelsV2DiffusionForcingVideoToVideoPipeline

+ - all

+ - __call__

+

+## SkyReelsV2Pipeline

+

+[[autodoc]] SkyReelsV2Pipeline

+ - all

+ - __call__

+

+## SkyReelsV2ImageToVideoPipeline

+

+[[autodoc]] SkyReelsV2ImageToVideoPipeline

+ - all

+ - __call__

+

+## SkyReelsV2PipelineOutput

+

+[[autodoc]] pipelines.skyreels_v2.pipeline_output.SkyReelsV2PipelineOutput

diff --git a/docs/source/en/api/pipelines/stable_audio.md b/docs/source/en/api/pipelines/stable_audio.md

index 1acb72b3968a..82763a52a942 100644

--- a/docs/source/en/api/pipelines/stable_audio.md

+++ b/docs/source/en/api/pipelines/stable_audio.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# GLIGEN (Grounded Language-to-Image Generation)

The GLIGEN model was created by researchers and engineers from [University of Wisconsin-Madison, Columbia University, and Microsoft](https://github.com/gligen/GLIGEN). The [`StableDiffusionGLIGENPipeline`] and [`StableDiffusionGLIGENTextImagePipeline`] can generate photorealistic images conditioned on grounding inputs. Along with text and bounding boxes with [`StableDiffusionGLIGENPipeline`], if input images are given, [`StableDiffusionGLIGENTextImagePipeline`] can insert objects described by text at the region defined by bounding boxes. Otherwise, it'll generate an image described by the caption/prompt and insert objects described by text at the region defined by bounding boxes. It's trained on COCO2014D and COCO2014CD datasets, and the model uses a frozen CLIP ViT-L/14 text encoder to condition itself on grounding inputs.

@@ -18,13 +21,10 @@ The abstract from the [paper](https://huggingface.co/papers/2301.07093) is:

*Large-scale text-to-image diffusion models have made amazing advances. However, the status quo is to use text input alone, which can impede controllability. In this work, we propose GLIGEN, Grounded-Language-to-Image Generation, a novel approach that builds upon and extends the functionality of existing pre-trained text-to-image diffusion models by enabling them to also be conditioned on grounding inputs. To preserve the vast concept knowledge of the pre-trained model, we freeze all of its weights and inject the grounding information into new trainable layers via a gated mechanism. Our model achieves open-world grounded text2img generation with caption and bounding box condition inputs, and the grounding ability generalizes well to novel spatial configurations and concepts. GLIGEN’s zeroshot performance on COCO and LVIS outperforms existing supervised layout-to-image baselines by a large margin.*

-

-

-Make sure to check out the Stable Diffusion [Tips](https://huggingface.co/docs/diffusers/en/api/pipelines/stable_diffusion/overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality and how to reuse pipeline components efficiently!

-

-If you want to use one of the official checkpoints for a task, explore the [gligen](https://huggingface.co/gligen) Hub organizations!

-

-

+> [!TIP]

+> Make sure to check out the Stable Diffusion [Tips](https://huggingface.co/docs/diffusers/en/api/pipelines/stable_diffusion/overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality and how to reuse pipeline components efficiently!

+>

+> If you want to use one of the official checkpoints for a task, explore the [gligen](https://huggingface.co/gligen) Hub organization!

[`StableDiffusionGLIGENPipeline`] was contributed by [Nikhil Gajendrakumar](https://github.com/nikhil-masterful) and [`StableDiffusionGLIGENTextImagePipeline`] was contributed by [Nguyễn Công Tú Anh](https://github.com/tuanh123789).

diff --git a/docs/source/en/api/pipelines/stable_diffusion/image_variation.md b/docs/source/en/api/pipelines/stable_diffusion/image_variation.md

index 57dd2f0d5b39..b1b7146b336f 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/image_variation.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/image_variation.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# K-Diffusion

[k-diffusion](https://github.com/crowsonkb/k-diffusion) is a popular library created by [Katherine Crowson](https://github.com/crowsonkb/). We provide `StableDiffusionKDiffusionPipeline` and `StableDiffusionXLKDiffusionPipeline` that allow you to run Stable Diffusion with samplers from k-diffusion.

diff --git a/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md b/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md

index 9abccd6e1347..19eae9a9ce44 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/latent_upscale.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Text-to-(RGB, depth)

@@ -19,7 +22,7 @@ specific language governing permissions and limitations under the License.

LDM3D was proposed in [LDM3D: Latent Diffusion Model for 3D](https://huggingface.co/papers/2305.10853) by Gabriela Ben Melech Stan, Diana Wofk, Scottie Fox, Alex Redden, Will Saxton, Jean Yu, Estelle Aflalo, Shao-Yen Tseng, Fabio Nonato, Matthias Muller, and Vasudev Lal. Unlike existing text-to-image diffusion models such as [Stable Diffusion](./overview), which only generate an image, LDM3D generates both an image and a depth map from a given text prompt. With almost the same number of parameters, LDM3D creates a latent space that can compress both RGB images and depth maps.

Two checkpoints are available for use:

-- [ldm3d-original](https://huggingface.co/Intel/ldm3d). The original checkpoint used in the [paper](https://arxiv.org/pdf/2305.10853.pdf)

+- [ldm3d-original](https://huggingface.co/Intel/ldm3d). The original checkpoint used in the [paper](https://huggingface.co/papers/2305.10853)

- [ldm3d-4c](https://huggingface.co/Intel/ldm3d-4c). The new version of LDM3D, which uses 4-channel inputs instead of 6-channel inputs and is fine-tuned on higher-resolution images.

@@ -27,11 +30,8 @@ The abstract from the paper is:

*This research paper proposes a Latent Diffusion Model for 3D (LDM3D) that generates both image and depth map data from a given text prompt, allowing users to generate RGBD images from text prompts. The LDM3D model is fine-tuned on a dataset of tuples containing an RGB image, depth map and caption, and validated through extensive experiments. We also develop an application called DepthFusion, which uses the generated RGB images and depth maps to create immersive and interactive 360-degree-view experiences using TouchDesigner. This technology has the potential to transform a wide range of industries, from entertainment and gaming to architecture and design. Overall, this paper presents a significant contribution to the field of generative AI and computer vision, and showcases the potential of LDM3D and DepthFusion to revolutionize content creation and digital experiences. A short video summarizing the approach can be found at [this url](https://t.ly/tdi2).*

-

-

-Make sure to check out the Stable Diffusion [Tips](overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality, and how to reuse pipeline components efficiently!

-

-

+> [!TIP]

+> Make sure to check out the Stable Diffusion [Tips](overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality, and how to reuse pipeline components efficiently!

## StableDiffusionLDM3DPipeline

@@ -48,7 +48,7 @@ Make sure to check out the Stable Diffusion [Tips](overview#tips) section to lea

# Upscaler

-[LDM3D-VR](https://arxiv.org/pdf/2311.03226.pdf) is an extended version of LDM3D.

+[LDM3D-VR](https://huggingface.co/papers/2311.03226) is an extended version of LDM3D.

The abstract from the paper is:

*Latent diffusion models have proven to be state-of-the-art in the creation and manipulation of visual outputs. However, as far as we know, the generation of depth maps jointly with RGB is still limited. We introduce LDM3D-VR, a suite of diffusion models targeting virtual reality development that includes LDM3D-pano and LDM3D-SR. These models enable the generation of panoramic RGBD based on textual prompts and the upscaling of low-resolution inputs to high-resolution RGBD, respectively. Our models are fine-tuned from existing pretrained models on datasets containing panoramic/high-resolution RGB images, depth maps and captions. Both models are evaluated in comparison to existing related methods*

diff --git a/docs/source/en/api/pipelines/stable_diffusion/overview.md b/docs/source/en/api/pipelines/stable_diffusion/overview.md

index 25984091215c..2d2de39c91a8 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/overview.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/overview.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Safe Stable Diffusion

Safe Stable Diffusion was proposed in [Safe Latent Diffusion: Mitigating Inappropriate Degeneration in Diffusion Models](https://huggingface.co/papers/2211.05105) and mitigates the inappropriate degeneration that Stable Diffusion models can exhibit because they're trained on unfiltered web-crawled datasets. For instance, Stable Diffusion may unexpectedly generate nudity, violence, images depicting self-harm, and otherwise offensive content. Safe Stable Diffusion is an extension of Stable Diffusion that drastically reduces this type of content.

@@ -42,11 +45,8 @@ There are 4 configurations (`SafetyConfig.WEAK`, `SafetyConfig.MEDIUM`, `SafetyC

>>> out = pipeline(prompt=prompt, **SafetyConfig.MAX)

```

-

-

-Make sure to check out the Stable Diffusion [Tips](overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality, and how to reuse pipeline components efficiently!

-

-

+> [!TIP]

+> Make sure to check out the Stable Diffusion [Tips](overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality, and how to reuse pipeline components efficiently!

## StableDiffusionPipelineSafe

diff --git a/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md b/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md

index 485ee7d7fc28..6863d408b5fd 100644

--- a/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md

+++ b/docs/source/en/api/pipelines/stable_diffusion/stable_diffusion_xl.md

@@ -1,4 +1,4 @@

-

-

-

-🧪 This pipeline is for research purposes only.

-

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

# Text-to-video

@@ -22,7 +19,7 @@ specific language governing permissions and limitations under the License.

-[ModelScope Text-to-Video Technical Report](https://arxiv.org/abs/2308.06571) is by Jiuniu Wang, Hangjie Yuan, Dayou Chen, Yingya Zhang, Xiang Wang, Shiwei Zhang.

+[ModelScope Text-to-Video Technical Report](https://huggingface.co/papers/2308.06571) is by Jiuniu Wang, Hangjie Yuan, Dayou Chen, Yingya Zhang, Xiang Wang, Shiwei Zhang.

The abstract from the paper is:

@@ -175,13 +172,10 @@ Here are some sample outputs:

Video generation is memory-intensive, and one way to reduce your memory usage is to call `enable_forward_chunking` on the pipeline's UNet so you don't run the entire feedforward layer at once. Breaking it up into chunks in a loop is more memory-efficient.
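Chunking works because feed-forward layers are position-wise: each frame or token is transformed independently, so running the layer on slices and concatenating the results gives the same output while only one slice's wider intermediate activations exist at a time. The NumPy sketch below illustrates the idea with a toy two-layer MLP; the sizes and the `feed_forward`/`chunked_feed_forward` helpers are hypothetical and unrelated to the actual UNet code.

```python
import numpy as np


def feed_forward(x, w1, w2):
    # Toy position-wise MLP: every row of x is processed independently
    return np.maximum(x @ w1, 0.0) @ w2


def chunked_feed_forward(x, w1, w2, chunk_size):
    # Process `chunk_size` rows at a time, so the wide hidden activation (rows x 256 here)
    # is only materialized for one chunk at once
    split_points = list(range(chunk_size, x.shape[0], chunk_size))
    return np.concatenate([feed_forward(chunk, w1, w2) for chunk in np.split(x, split_points)], axis=0)


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 64))    # 16 positions with 64 features each
w1 = rng.standard_normal((64, 256))  # expand to a wider hidden layer
w2 = rng.standard_normal((256, 64))  # project back to 64 features

print(np.allclose(feed_forward(x, w1, w2), chunked_feed_forward(x, w1, w2, chunk_size=4)))  # True
```

The trade-off is more, smaller matrix multiplications, which is why chunking saves memory rather than time.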

-Check out the [Text or image-to-video](text-img2vid) guide for more details about how certain parameters can affect video generation and how to optimize inference by reducing memory usage.

-

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

+Check out the [Text or image-to-video](../../using-diffusers/text-img2vid) guide for more details about how certain parameters can affect video generation and how to optimize inference by reducing memory usage.

-

+> [!TIP]

+> Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

## TextToVideoSDPipeline

[[autodoc]] TextToVideoSDPipeline

diff --git a/docs/source/en/api/pipelines/text_to_video_zero.md b/docs/source/en/api/pipelines/text_to_video_zero.md

index 44d9a6670af4..50e7620760f3 100644

--- a/docs/source/en/api/pipelines/text_to_video_zero.md

+++ b/docs/source/en/api/pipelines/text_to_video_zero.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# Text2Video-Zero

@@ -34,7 +37,7 @@ Our key modifications include (i) enriching the latent codes of the generated fr

Experiments show that this leads to low overhead, yet high-quality and remarkably consistent video generation. Moreover, our approach is not limited to text-to-video synthesis but is also applicable to other tasks such as conditional and content-specialized video generation, and Video Instruct-Pix2Pix, i.e., instruction-guided video editing.

As experiments show, our method performs comparably or sometimes better than recent approaches, despite not being trained on additional video data.*

-You can find additional information about Text2Video-Zero on the [project page](https://text2video-zero.github.io/), [paper](https://arxiv.org/abs/2303.13439), and [original codebase](https://github.com/Picsart-AI-Research/Text2Video-Zero).

+You can find additional information about Text2Video-Zero on the [project page](https://text2video-zero.github.io/), [paper](https://huggingface.co/papers/2303.13439), and [original codebase](https://github.com/Picsart-AI-Research/Text2Video-Zero).

## Usage example

@@ -55,9 +58,9 @@ result = [(r * 255).astype("uint8") for r in result]

imageio.mimsave("video.mp4", result, fps=4)

```

You can change these parameters in the pipeline call:

-* Motion field strength (see the [paper](https://arxiv.org/abs/2303.13439), Sect. 3.3.1):

+* Motion field strength (see the [paper](https://huggingface.co/papers/2303.13439), Sect. 3.3.1):

* `motion_field_strength_x` and `motion_field_strength_y`. Default: `motion_field_strength_x=12`, `motion_field_strength_y=12`

-* `T` and `T'` (see the [paper](https://arxiv.org/abs/2303.13439), Sect. 3.3.1)

+* `T` and `T'` (see the [paper](https://huggingface.co/papers/2303.13439), Sect. 3.3.1)

* `t0` and `t1` in the range `{0, ..., num_inference_steps}`. Default: `t0=45`, `t1=48`

* Video length:

* `video_length`, the number of frames to generate. Default: `video_length=8`

@@ -286,11 +289,8 @@ can run with custom [DreamBooth](../../training/dreambooth) models, as shown bel

You can find some available DreamBooth-trained models with [this link](https://huggingface.co/models?search=dreambooth).

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

-

-

+> [!TIP]

+> Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

## TextToVideoZeroPipeline

[[autodoc]] TextToVideoZeroPipeline

diff --git a/docs/source/en/api/pipelines/unclip.md b/docs/source/en/api/pipelines/unclip.md

index 943cebdb28a2..7c5c2b0d9ab9 100644

--- a/docs/source/en/api/pipelines/unclip.md

+++ b/docs/source/en/api/pipelines/unclip.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# unCLIP

[Hierarchical Text-Conditional Image Generation with CLIP Latents](https://huggingface.co/papers/2204.06125) is by Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, Mark Chen. The unCLIP model in 🤗 Diffusers comes from kakaobrain's [karlo](https://github.com/kakaobrain/karlo).

@@ -17,11 +20,8 @@ The abstract from the paper is following:

You can find lucidrains' DALL-E 2 recreation at [lucidrains/DALLE2-pytorch](https://github.com/lucidrains/DALLE2-pytorch).

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

-

-

+> [!TIP]

+> Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

## UnCLIPPipeline

[[autodoc]] UnCLIPPipeline

diff --git a/docs/source/en/api/pipelines/unidiffuser.md b/docs/source/en/api/pipelines/unidiffuser.md

index 802aefea6be5..2ff700e4b8be 100644

--- a/docs/source/en/api/pipelines/unidiffuser.md

+++ b/docs/source/en/api/pipelines/unidiffuser.md

@@ -1,4 +1,4 @@

-

+> [!WARNING]

+> This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

+

# UniDiffuser

@@ -24,11 +27,8 @@ The abstract from the paper is:

You can find the original codebase at [thu-ml/unidiffuser](https://github.com/thu-ml/unidiffuser) and additional checkpoints at [thu-ml](https://huggingface.co/thu-ml).

-

-

-There is currently an issue on PyTorch 1.X where the output images are all black or the pixel values become `NaNs`. This issue can be mitigated by switching to PyTorch 2.X.

-

-

+> [!WARNING]

+> There is currently an issue on PyTorch 1.X where the output images are all black or the pixel values become `NaN`. Switching to PyTorch 2.X mitigates this issue.

This pipeline was contributed by [dg845](https://github.com/dg845). ❤️

@@ -194,11 +194,8 @@ final_prompt = sample.text[0]

print(final_prompt)

```

-

-

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

-

-

+> [!TIP]

+> Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

## UniDiffuserPipeline

[[autodoc]] UniDiffuserPipeline

diff --git a/docs/source/en/api/pipelines/value_guided_sampling.md b/docs/source/en/api/pipelines/value_guided_sampling.md

index 5aaee9090cef..d050ea309ca5 100644

--- a/docs/source/en/api/pipelines/value_guided_sampling.md

+++ b/docs/source/en/api/pipelines/value_guided_sampling.md

@@ -1,4 +1,4 @@

-

+

+# VisualCloze

+

+[VisualCloze: A Universal Image Generation Framework via Visual In-Context Learning](https://huggingface.co/papers/2504.07960) is a universal image generation framework based on visual in-context learning. It offers the following key capabilities:

+1. Support for various in-domain tasks

+2. Generalization to unseen tasks through in-context learning

+3. Unification of multiple tasks into a single step, generating both the target image and the intermediate results

+4. Reverse generation, inferring the conditions from target images

+

+## Overview

+

+The abstract from the paper is:

+

+*Recent progress in diffusion models significantly advances various image generation tasks. However, the current mainstream approach remains focused on building task-specific models, which have limited efficiency when supporting a wide range of different needs. While universal models attempt to address this limitation, they face critical challenges, including generalizable task instruction, appropriate task distributions, and unified architectural design. To tackle these challenges, we propose VisualCloze, a universal image generation framework, which supports a wide range of in-domain tasks, generalization to unseen ones, unseen unification of multiple tasks, and reverse generation. Unlike existing methods that rely on language-based task instruction, leading to task ambiguity and weak generalization, we integrate visual in-context learning, allowing models to identify tasks from visual demonstrations. Meanwhile, the inherent sparsity of visual task distributions hampers the learning of transferable knowledge across tasks. To this end, we introduce Graph200K, a graph-structured dataset that establishes various interrelated tasks, enhancing task density and transferable knowledge. Furthermore, we uncover that our unified image generation formulation shared a consistent objective with image infilling, enabling us to leverage the strong generative priors of pre-trained infilling models without modifying the architectures. The codes, dataset, and models are available at https://visualcloze.github.io.*

+

+## Inference

+

+### Model loading

+

+VisualCloze is a two-stage cascade pipeline, containing `VisualClozeGenerationPipeline` and `VisualClozeUpsamplingPipeline`.

+- In `VisualClozeGenerationPipeline`, each image is downsampled before the images are concatenated into a grid layout, which avoids excessively high resolutions. VisualCloze releases two Diffusers-compatible models, [VisualClozePipeline-384](https://huggingface.co/VisualCloze/VisualClozePipeline-384) and [VisualClozePipeline-512](https://huggingface.co/VisualCloze/VisualClozePipeline-512), which downsample images to resolutions of 384 and 512, respectively.

+- `VisualClozeUpsamplingPipeline` uses [SDEdit](https://huggingface.co/papers/2108.01073) to enable high-resolution image synthesis.

+

+`VisualClozePipeline` combines both stages for convenient end-to-end sampling, and each stage's pipeline can still be used independently as needed.
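The upsampling stage relies on SDEdit. Below is a rough, framework-free illustration of the idea. It is not the code of `VisualClozeUpsamplingPipeline`. The sketch noises an upsampled image to an intermediate timestep, then computes how many denoising steps remain. It assumes the variance-preserving forward process `x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise` from the SDEdit paper and an img2img-style `strength` parameter. The underlying model may use a different noise schedule, and the names `sdedit_start` and `denoising_steps` are hypothetical.

```python
import math
import random
from typing import List


def sdedit_start(x0: List[float], alpha_bar: float, seed: int = 0) -> List[float]:
    """Noise a clean signal x0 to an intermediate timestep (variance-preserving form)."""
    if not 0.0 <= alpha_bar <= 1.0:
        raise ValueError("alpha_bar must lie in [0, 1]")
    rng = random.Random(seed)
    signal, noise_scale = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    # Each value keeps a sqrt(alpha_bar) share of the input plus Gaussian noise.
    return [signal * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


def denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps run when starting from the noised input.

    strength=1.0 starts from pure noise (all steps); strength=0.0 keeps the input.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    return min(int(num_inference_steps * strength), num_inference_steps)


# A tiny "upsampled image" flattened to a list of pixel values in [-1, 1].
upsampled = [0.5, -0.25, 1.0, 0.0]
noisy = sdedit_start(upsampled, alpha_bar=0.6)
print(len(noisy), denoising_steps(50, 0.4))
```

A higher `strength` discards more of the first-stage result. This trades fidelity to the low-resolution image for more freedom to add high-resolution detail.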

+

+### Input Specifications

+

+#### Task and Content Prompts

+- Task prompt: required; describes the intent of the generation task

+- Content prompt: optional; a description or caption of the target image

+- When a content prompt is not needed, pass `None`

+- For batch inference, pass a `List[str|None]`

+

+#### Image Input Format

+- Format: `List[List[Image|None]]`

+- Structure:

+  - All rows except the last represent in-context examples

+  - The last row represents the current query, with the target image set to `None`

+- For batch inference, pass `List[List[List[Image|None]]]`
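The input conventions above can be checked before calling the pipeline. Below is a minimal sketch using hypothetical `check_visualcloze_inputs` and `check_batch` helpers; neither is part of Diffusers. The sketch uses plain strings as stand-ins for `PIL.Image.Image` objects, so it runs without image files. It makes three assumptions, which match the examples in this guide but may be stricter than what the pipeline actually enforces:

- Every row of the grid has the same number of images.
- In-context rows are fully populated.
- Batched prompts and image grids have the same length.

```python
from typing import List, Optional, Sequence

Image = object  # stand-in for PIL.Image.Image in this sketch
Grid = Sequence[Sequence[Optional[Image]]]


def check_visualcloze_inputs(
    images: Grid, task_prompt: str, content_prompt: Optional[str] = None
) -> int:
    """Validate one (non-batched) request; return the number of in-context examples."""
    if not task_prompt:
        raise ValueError("a task prompt is required")
    if content_prompt is not None and not isinstance(content_prompt, str):
        raise TypeError("content_prompt must be a string or None")
    if len(images) < 1:
        raise ValueError("need at least the query row")
    width = len(images[-1])
    if any(len(row) != width for row in images):
        raise ValueError("all rows must contain the same number of images")
    # Assumption: in-context example rows are complete.
    if any(img is None for row in images[:-1] for img in row):
        raise ValueError("in-context example rows must not contain None")
    # The query row marks the image(s) to generate with None.
    if not any(img is None for img in images[-1]):
        raise ValueError("the query row needs at least one None (image to generate)")
    return len(images) - 1


def check_batch(
    batch_images: Sequence[Grid],
    task_prompts: Sequence[str],
    content_prompts: Sequence[Optional[str]],
) -> List[int]:
    """Validate a batch; assumes one prompt pair per image grid."""
    if not (len(batch_images) == len(task_prompts) == len(content_prompts)):
        raise ValueError("batch sizes of images and prompts must match")
    return [
        check_visualcloze_inputs(g, t, c)
        for g, t, c in zip(batch_images, task_prompts, content_prompts)
    ]


grid = [
    ["example_mask", "example_image"],  # in-context example
    ["query_mask", None],               # query: the target image is None
]
print(check_visualcloze_inputs(grid, "mask to image", None))  # 1
```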

+

+#### Resolution Control

+- Default behavior:

+  - First stage (initial generation): an image area of about `pipe.resolution`² pixels

+  - Second stage (upsampling): a 3× upscaling factor

+- Custom resolution: set the `upsampling_height` and `upsampling_width` parameters
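To see what the default resolution settings imply, here is a small arithmetic sketch. It assumes the first stage does three things:

- keeps the aspect ratio of the query image;
- scales the image so that its area is close to `resolution`²;
- rounds each side down to a multiple of 16.

These details, the helper names `first_stage_size` and `final_size`, and the "both or neither" handling of the custom sizes are assumptions for illustration. They do not describe the pipeline's internal code.

```python
import math
from typing import Optional, Tuple


def first_stage_size(
    width: int, height: int, resolution: int = 384, multiple: int = 16
) -> Tuple[int, int]:
    """Scale (width, height) to an area of about resolution**2, keeping the aspect ratio."""
    ratio = width / height
    w = resolution * math.sqrt(ratio)
    h = resolution / math.sqrt(ratio)
    # Round down so the result never exceeds the target area.
    return int(w // multiple) * multiple, int(h // multiple) * multiple


def final_size(
    first_stage: Tuple[int, int],
    upsampling_width: Optional[int] = None,
    upsampling_height: Optional[int] = None,
    factor: int = 3,
) -> Tuple[int, int]:
    """Second-stage size: explicit values if both are given, otherwise factor x first stage."""
    if upsampling_width is not None and upsampling_height is not None:
        return upsampling_width, upsampling_height
    w, h = first_stage
    return w * factor, h * factor


square = first_stage_size(1024, 1024)
print(square, final_size(square))  # (384, 384) (1152, 1152)
landscape = first_stage_size(640, 480)
print(landscape, final_size(landscape))
```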

+

+### Examples

+

+For comprehensive examples covering a wide range of tasks, please refer to the [Online Demo](https://huggingface.co/spaces/VisualCloze/VisualCloze) and [GitHub Repository](https://github.com/lzyhha/VisualCloze). Below are simple examples for three cases: mask-to-image conversion, edge detection, and subject-driven generation.

+

+#### Example for mask2image

+

+```python

+import torch

+from diffusers import VisualClozePipeline

+from diffusers.utils import load_image

+

+pipe = VisualClozePipeline.from_pretrained("VisualCloze/VisualClozePipeline-384", resolution=384, torch_dtype=torch.bfloat16)

+pipe.to("cuda")

+

+# Load in-context images (make sure the paths are correct and accessible)

+image_paths = [

+    # in-context examples

+    [

+        load_image('https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/visualcloze/visualcloze_mask2image_incontext-example-1_mask.jpg'),

+        load_image('https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/visualcloze/visualcloze_mask2image_incontext-example-1_image.jpg'),

+    ],

+    # query with the target image

+    [

+        load_image('https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/visualcloze/visualcloze_mask2image_query_mask.jpg'),

+        None,  # No image needed for the target image

+    ],

+]

+

+# Task and content prompt

+task_prompt = "In each row, a logical task is demonstrated to achieve [IMAGE2] an aesthetically pleasing photograph based on [IMAGE1] sam 2-generated masks with rich color coding."

+content_prompt = """Majestic photo of a golden eagle perched on a rocky outcrop in a mountainous landscape.

+The eagle is positioned in the right foreground, facing left, with its sharp beak and keen eyes prominently visible.

+Its plumage is a mix of dark brown and golden hues, with intricate feather details.

+The background features a soft-focus view of snow-capped mountains under a cloudy sky, creating a serene and grandiose atmosphere.

+The foreground includes rugged rocks and patches of green moss. Photorealistic, medium depth of field,

+soft natural lighting, cool color palette, high contrast, sharp focus on the eagle, blurred background,

+tranquil, majestic, wildlife photography."""

+

+# Run the pipeline

+image_result = pipe(